ADBC connectivity : faster columnar storage query

Hello all,

ADBC is a database connection standard (like ODBC or JDBC) but specifically designed for columnar storage (so database like DuckDB, Clickhouse, MonetDB, Vertica...). This is typically the kind of stuff that can make Dataiku way faster.

more info in

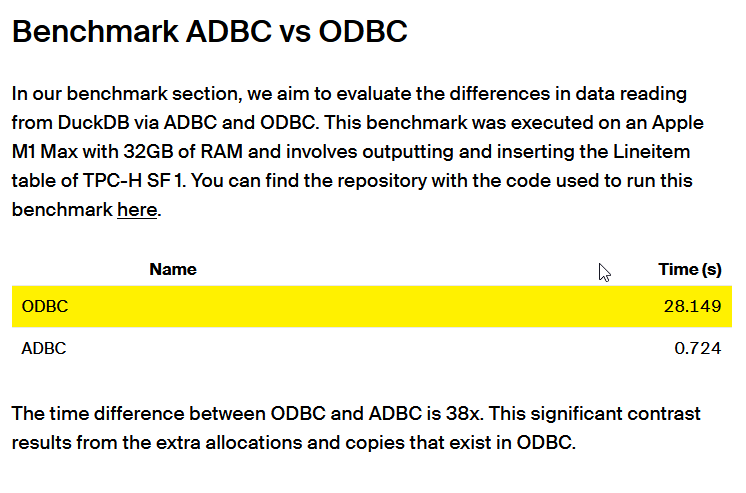

Here a benchmark made by the guys at DuckDB : 38x improvement

Best regards,

Simon

Comments

-

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,664 Neuron

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,664 NeuronSo several things on this one. First of all on paper this looks like a good idea but when you dig down it doesn't look so good. In the first link you added at the end of this post you find this statement:

"ADBC doesn’t intend to replace JDBC or ODBC in general. But for applications that just want bulk columnar data access, ADBC lets them avoid data conversion overhead and tedious integration work."

And this one:

"JDBC is row-oriented"

This is the main problem with your idea. Not only ADBC is not meant to replace JDBC, which Dataiku uses, but also Dataiku is been designed from the ground up to a row-oriented application. So introducing columnar datasets will require major reengineering.

Then the second main issue with your idea is where will the gains of using columnar datasets be obtained from? If you want to use Arrow or ADBC you can already do so using Python directly. And you can already benefit from huge computation benefits of storing data in columnar databases by storing your data in Databricks, Snowflake or BigQuery to name a few. In fact Dataiku and Databricks can already been integrated in such way that you push down all your compute needs either code recipes or visual recipes. So all the benefits are there for you to take them already.

-

@Turribeach I may have been not clear : the performance gain is when you import/extract data from a columnar database. Let's say I want to extract data from Apache Arrow and write it into a BigQuery after a few transform on Dataiku. Basically, we have Apache Arrow⇒Export to Dataiku DSS⇒Transform in Dataiku DSS⇒Export to GCP. This is on the first step we can take advantage of ADBC and the reason why I want it on Dataiku. It doesn't require any change in DSS internal structure and it's neither a new engine nor a "in-database" feature.

Best regards,

Simon -

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,664 Neuron

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,664 NeuronThis is exactly my point. You can already achieve performance in your import/extract data from a columnar database to another by using pure Python code and you can even run that inside Dataiku as a Python recipe. You will never get the best performance when you pivot the data to Dataiku datasets as you will be forced to use JDBC to write the dataset. And as your own link stated ADBC is not meant to be used as a JDBC replacement which is what you are effectively asking.

In v13 Dataiku added support for Pandas 2.x and while they don't natively support the PyArrow backend nothing stops you from using it inside a Dataiku Python recipe to read your data directly from files, you just can't persist the data in this format but you can avoid that by again using files in a local file system as your output.

For your idea to really make any sense Dataiku will need to support storing data in columnar format and change all the recipes to be able to handle that. That's a huge undertaking which seems to have little ROI given that you can already use push down recipes (ie SQL Engine) to obtain the benefits of columnar database performance without having to move the data inside Dataiku. The only situation where this doesn't work is on some preprocessors in the Prepare recipe and on Python recipes which of course need to load the data in memory to work. In a lot of cases you can leave these steps to a later part of the flow once the data has aggregated/reduced so the impact of having to load it in memory and use JDBC is much lower.Now to your specific use case (Apache Arrow⇒Export to Dataiku DSS⇒Transform in Dataiku DSS⇒Export to BigQuery) the best performance would be doing this: Apache Arrow⇒ Parquet ⇒ GCP Bucket ⇒ GCP BigQuery ⇒Transform in Dataiku using pushdown SQL engine recipes leaving the data in BigQuery. Putting Dataiku in the middle of your data moves will slow down your process massively and I even doubt that adding ADBC would solve that.

Ultimately the whole point I am trying to make is that if the main reason you want ADBC support is for performance you will be much better off using a columnar database as a Dataiku backing store and avoiding as much as possible moving the data into memory by using push down recipes. No matter how fast you can tune your IO it will never be as fast as leaving the data where it is and doing the compute there.