Sign up to take part

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

The third Conundrum is here. Pick your brains with this one!

Today is February 28, 2017. You are given a data-set (attached) with the order date and whether or not it is the customer’s first ever order* from Haiku T-Shirts.

Can you find the number of unique customers in each of the following cohorts?

Cohort A: All customers whose first order was in the last 30 days.

Cohort B: All customers whose first order was in the last 60 days who are not part of Cohort A.

Cohort C: All customers whose first order was in the last 90 days who are not part of Cohort A or B.

If you would like to share your answer please do export your project and upload it here! Refer to our Submission Guidelines to see how to properly export your project.

*order_number will tell you if it's their first order!

Hi Michael, thanks for this new conundrum!

I couldn't find any column containing the information whether or not it was the customer's first ever order. Am I missing something? Or maybe we have all the logs since the creation of Haiku T-Shirts and in this case we could build this column ourselves?

Hi! Thanks for working on this puzzler! Looks like you may have already figured this one out...for information about whether or not it is the customer's first order, check out order_number. Happy conundrumming!

I based my analysis on the assumption that the dataset contained all the logs since the beginning of Haiku T-shirt.

In order to determine when was the first order of each customer, I used a window recipe where I partitioned by customer_id, ordered by ascending order_date and used the rank aggregation. By doing so, all the rows for which the rank was equal to one corresponded to a first order. I then filtered all the other rows out.

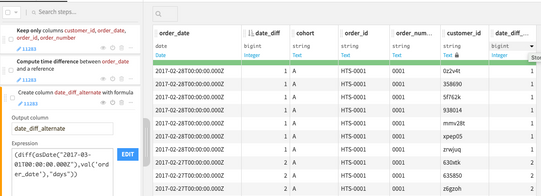

Finally, I created a column giving the number of days between the order date and 'today' (2017-02-28). I then created a column indicating the cohort each customer belonged to using a formula.

I made a bar chart with the count of records by level of cohort (filtering out the rows for which customers didn't belong to cohort A, B or C). As we only kept first orders, customers are already unique in our prepared dataset. We can also get the information of the number of unique customers by cohort using the 'analyze' option on our cohort column. We got 138, 133 and 182 unique customers for cohorts A, B and C respectively.

I hope I helped! 🙂

@anita-clmnt I got slightly different numbers, which I confirmed using multiple methods. I'm attaching the project in case you're interested.

This project finds the answer for the Community Conundrum using two different methods.

Using the visual recipes, there are two distinct steps. The first visual recipe is a Windows recipe that finds the first order using customer_id to create a window and find the first order date for each one.

The second is a Prepare recipe that does the following:

The total number in all cohorts is 606. Here is how the cohorts break down:

The Python recipe goes through the same steps using the pandas and dateime packages. The output is a DSS dataset with the same numbers.

Hi @YashasV , thanks for commenting! 🙂

We indeed didn't use the same strategy and I found out where the difference was coming from.

If we use your strategy, we don't remove what I would call 'first order duplicates' by customer_id. If you keep only the rows for which customer_number==0001 and then group by customer_id and count the number of rows, you'll see that for many customer_id have multiple first orders. I took a screenshot of th result, the first rows are displayed below.

So, for me, it would make more sense to keep the oldest 'first order' only as Michael asked for unique customers. This is what my partition strategy does. But this is my own interpretation of the conundrum!

What's your opinion on that?

-Anita

@anita-clmnt , you’re welcome. Yes, you are indeed right about the first order duplicates. I had a look through your project. It seems the different approaches would end up at a similar answer, if DSS had created the chart from the entire dataset instead of a sample. Here is what the chart looks like, when I did that.

Also, I had counted the days from Mar-01, 2017. As such, the last 90 days included Feb-28 and left out an earlier day, leading to a few less customers in the cohorts (606 vs 614). Either way, the numbers are pretty similar.

I’ve incorporated your finding about the duplicates in my project as well. Let me know if you have any questions!

@YashasV ,

Thanks for reminding me that plots are drawn from the sample! I started using Dataiku DSS only few weeks ago and I'm clearly still missing some subtleties to the platform! 😉

Why did you decide to count from March 1 instead of Feb 28?

-Anita