How to convert Visual Recipes to Code Recipes?

I have a Dataiku project handed over from others, which was built with visual recipes. As I'm used to using Python for implementation and maintenance, I would like to convert the project to code recipes. Does Dataiku provide a quick conversion for this scenario?

Operating system used: Windows

Answers

-

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,664 Neuron


Hi Neville,

I hope that you are doing well.

This is indeed not currently possible for visual recipes. However, I would love to hear more about your need to convert visual recipes to Python recipes :)

Visual recipes are meant to make the platform accessible to non-technical users, so they are not designed to be converted to Python. Is the goal to further customize a visual recipe? What would your exact use case be?

We would love to hear more about your experience.

Best regards,

Yasmine

-

tgb417 Dataiku DSS Core Designer, Dataiku DSS & SQL, Dataiku DSS ML Practitioner, Dataiku DSS Core Concepts, Neuron 2020, Neuron, Registered, Dataiku Frontrunner Awards 2021 Finalist, Neuron 2021, Neuron 2022, Frontrunner 2022 Finalist, Frontrunner 2022 Winner, Dataiku Frontrunner Awards 2021 Participant, Frontrunner 2022 Participant, Neuron 2023 Posts: 1,642 Neuron

Although conversion to Python is not broadly available, you can convert visual ML models to Python directly. You can also convert many of the visual recipes into SQL code recipes. I don't know if that would be helpful to you. This has been helpful to me from time to time.
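Since there is no automatic conversion to Python, a rewrite would have to be done by hand, recipe by recipe. As a rough idea of what that involves, here is a minimal pandas sketch of a Prepare-style cleanup followed by a Group-style aggregation. The column names, the sample data, and the `prepare` / `group` helper names are all hypothetical, and the code does not reproduce Dataiku's actual processors; it only approximates the kind of logic such recipes typically hold.

```python
import pandas as pd

# Hypothetical input standing in for a dataset read by a Python recipe.
raw = pd.DataFrame(
    {
        "region": [" eu", "EU ", "us", "US", None],
        "amount": ["10", "5", "7.5", "bad", "3"],
    }
)


def prepare(df):
    """Approximate a few Prepare steps: clean text, parse numbers, drop bad rows."""
    out = df.copy()
    out["region"] = out["region"].str.strip().str.upper()
    # Values that cannot be parsed as numbers become NaN instead of raising.
    out["amount"] = pd.to_numeric(out["amount"], errors="coerce")
    return out.dropna(subset=["region", "amount"])


def group(df):
    """Approximate a Group step: one row per key with a sum and a count."""
    return df.groupby("region", as_index=False).agg(
        amount_sum=("amount", "sum"),
        row_count=("amount", "count"),
    )


result = group(prepare(raw))
print(result)
```

Each visual recipe in the flow would need its own hand-written equivalent like this, and the intermediate datasets disappear unless you write them out explicitly.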

-

Neville Qi Dataiku DSS Core Designer, Dataiku DSS ML Practitioner, Dataiku DSS Adv Designer, Registered Posts: 7 ✭✭✭

Thanks Yasmine.

For our scenarios, Dataiku is used to build automated data flows, so the project only uses the fundamental yellow-colored visual recipes (like Prepare / Group) to do the data massaging and ETL. No ML recipes or other advanced recipes are used. I assume Dataiku translates those recipes into code in the backend to execute the flow.

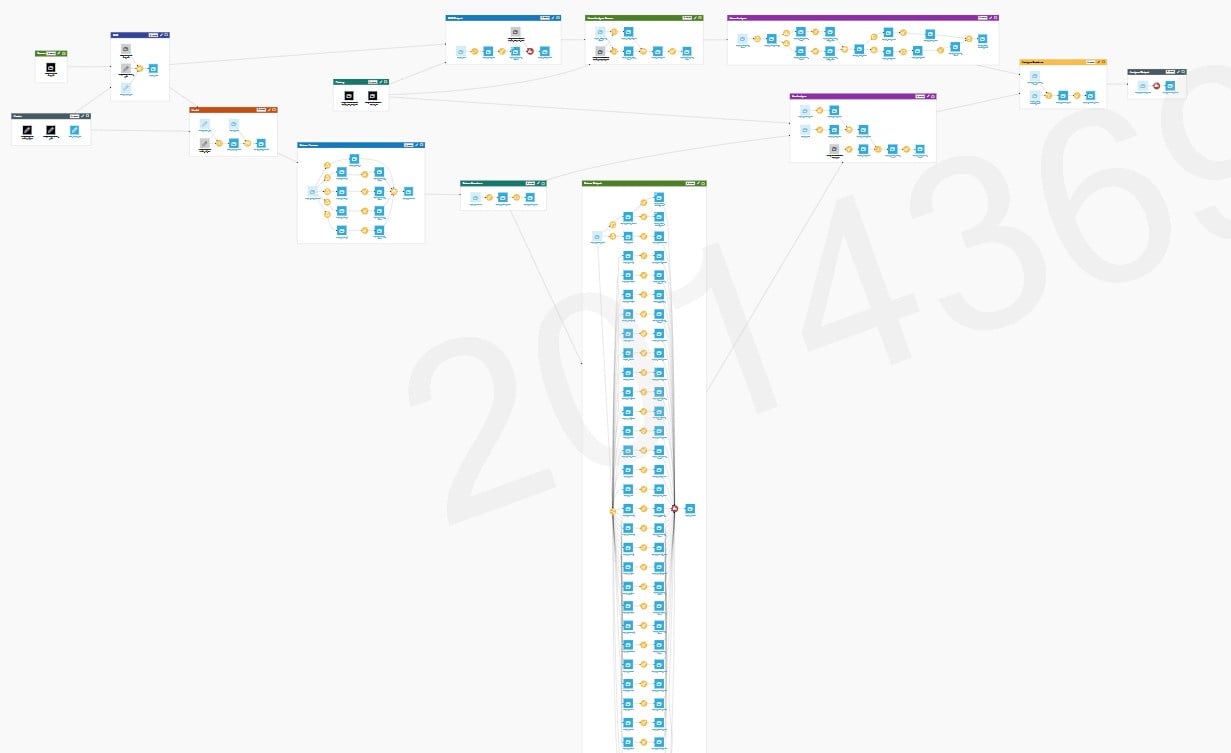

As you can see in the screenshot, the entire flow is super complicated and very hard to maintain from a Python code user's perspective. That's why I am asking whether there is any approach that can quickly convert the entire flow to Python code.

-

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,664 Neuron

Dataiku's strongest USP is that it can be used by both "clickers" and "coders", meaning people who know Data Engineering / Machine Learning (aka Data Scientists) and those who don't. Having the flow persist every intermediate dataset supports that vision. It's easy to follow the transformations visually when you can see the output of each step, and breaking complex data pipelines into steps makes them much easier to understand. Visual recipes also provide a zero-code approach that anyone can easily understand, not just Python developers.

The other thing you get by persisting the intermediate results is traceability and the ability to debug. Trying to figure out an issue in a complex Python recipe full of functions, with only the inputs and outputs to look at, is far harder than debugging it step by step. You will lose this capability if you move to Python recipes.

Then there is the performance angle. Visual recipes will normally be able to push down the compute to the data layer and handle billions of rows in seconds with technologies like Databricks. Using Python code recipes means all the data must first be loaded into memory before you can process it. So in general visual recipes can provide a massive performance benefit over code recipes, unless you use Kubernetes or other parallel compute capabilities.
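To illustrate the push-down point in isolation, here is a minimal sketch that uses an in-memory SQLite database as a stand-in for the data layer. The `orders` table, its columns, and both function names are hypothetical, and SQLite is only a convenient substitute for a real engine such as Databricks. The first function lets the database do the aggregation, so only one row per group reaches Python; the second pulls every row into the Python process before aggregating, which is closer to what a plain Python recipe does.

```python
import sqlite3
from collections import defaultdict

# Hypothetical table standing in for a large dataset held in a SQL database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EU", 10.0), ("EU", 5.0), ("US", 7.5), ("US", 2.5), ("APAC", 4.0)],
)


def totals_pushed_down(connection):
    """Let the database aggregate; only one row per region reaches Python."""
    rows = connection.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region"
    ).fetchall()
    return dict(rows)


def totals_in_python(connection):
    """Pull every row into the Python process, then aggregate there."""
    totals = defaultdict(float)
    for region, amount in connection.execute("SELECT region, amount FROM orders"):
        totals[region] += amount
    return dict(totals)


pushed = totals_pushed_down(conn)
local = totals_in_python(conn)
print(pushed == local)
```

Both functions return the same totals; the difference is how many rows cross from the database into Python, which is what matters once the table has billions of rows.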

While I can understand that you, being a Python developer, prefer to see everything in Python, the reality is that most people would prefer visual recipes. I disagree that the "entire flow is super complicated and very hard to maintain from a python code user's perspective". If you had the equivalent flow in a single Python recipe and gave both the flow version and the Python recipe version to a Python developer, which version do you think they would be able to understand, change and debug faster? I am a Python developer too, and I would say the visual recipe version is much easier to understand, change and debug. People code in a million different ways, and it's usually harder to understand what the code does when someone else wrote it.

In most ML use cases your biggest cost is people, since those who work on Dataiku projects tend to be high-value resources (Data Scientists, SMEs, BAs, Experts, PMs, Coders, Business Users), and a lot of them are not Python developers. So making things harder for non-coders to understand and debug doesn't seem like a reasonable trade-off just to keep Python developers happy.

At the end of the day Dataiku gives you the choice of using code or visual recipes so that's what's ultimately great about it. I wouldn't convert an existing flow from visual to code recipes unless it provided significant performance benefits, which in most cases it won't.

PS: Dataiku is not an ETL tool, and while it can do ETL it's not really the best tool for that. The nature of most ML projects means that ETL is usually needed as part of developing an ML use case, which is why Dataiku has to have the capability. But I would encourage you to look at moving your Dataiku ETL flows upstream to a more suitable ETL platform, as otherwise you will not be getting the best value out of your Dataiku license. It's not a mistake to start with the ETL in Dataiku while you are developing an ML use case, but once the use case proves valuable it might be more efficient to move the ETL part outside of Dataiku.