When importing datasets with columns stored as int, DSS seems to want to store them as double.

Using wip_discrete_jobs as an example we have columns that should be integers but are ingested as numeric.

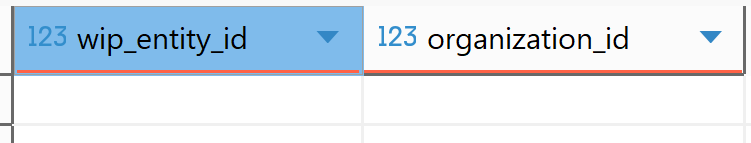

For example,

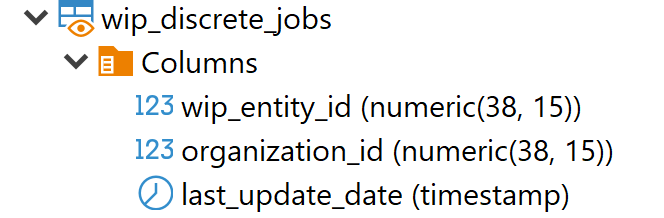

Table: wip_discrete_jobs

Column: wip_entity_id

Type: numeric(38,15)

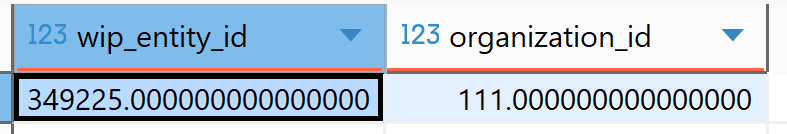

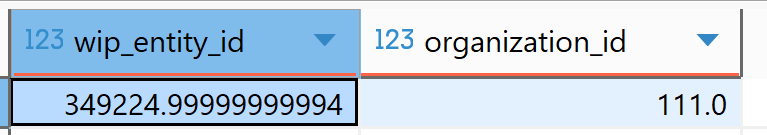

If I query the table

SELECT wip_entity_id, organization_id FROM khw_eng.wip_discrete_jobs WHERE wip_entity_id = 34922

The result is as expected with a scale of 15:

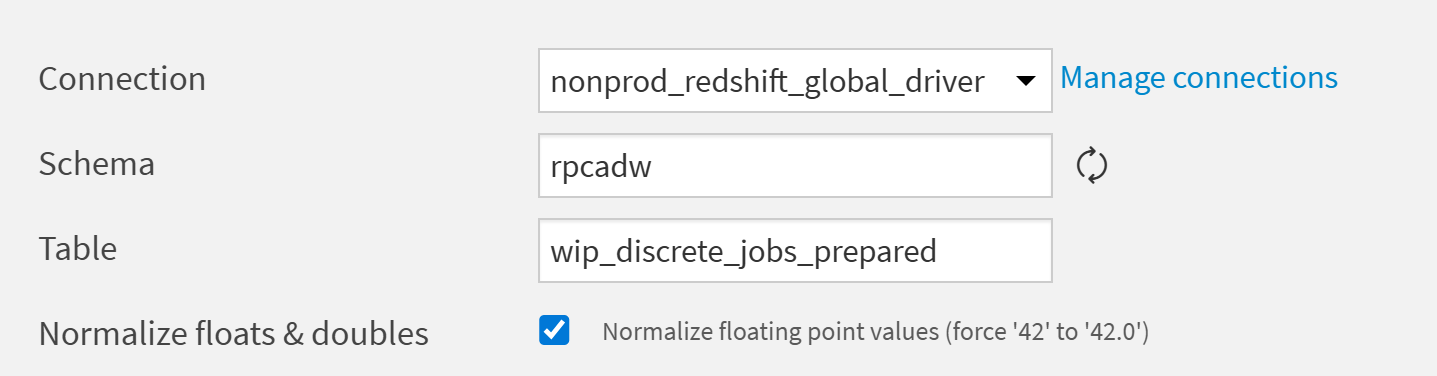

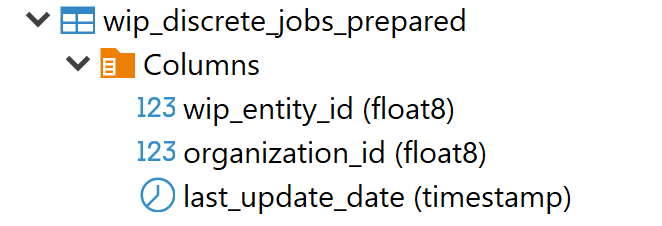

If I take this table as an input and run a data prepare step without adding any transformations, I end up with a different column type.

Schema: rpcadw

Table: wip_discrete_jobs_prepared

Column: wip_entity_id

Type: float8

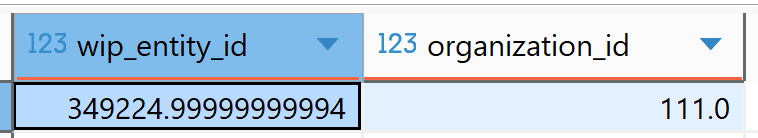

If I query the table, I end up with a NULL result:

SELECT wip_entity_id, organization_id FROM rpcadw.wip_discrete_jobs_prepared WHERE wip_entity_id = 349225

To return the result I must round instead:

SELECT wip_entity_id, organization_id FROM rpcadw.wip_discrete_jobs_prepared WHERE round(wip_entity_id) = 349225

We can see that the conversion to float by Dataiku has not preserved the true value.

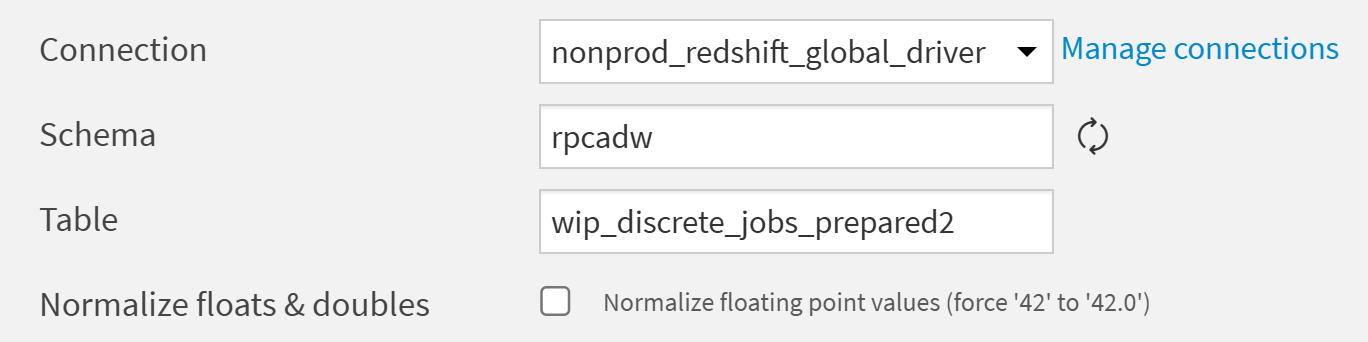
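As a rough illustration of why the equality filter fails while `round()` matches, here is a minimal Python sketch. The `stored` value is hypothetical (the real stored value is only visible in the screenshot above). Note that 349225 itself is exactly representable as a double, so the stored value must differ from it slightly for `= 349225` to return nothing.

```python
# Hypothetical stored value: chosen only to show the behaviour,
# it is NOT read from the actual table.
stored = 349225.0000001

# Exact equality fails as soon as the stored double is not exactly 349225.
print(stored == 349225)          # False

# Rounding first recovers the match, like round(wip_entity_id) = 349225.
print(round(stored) == 349225)   # True

# The integer itself converts to a double without any loss.
print(float("349225.000000000000000") == 349225)  # True
```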

I also tried not normalizing float/doubles:

But we have the same issue:

SELECT wip_entity_id, organization_id FROM rpcadw.wip_discrete_jobs_prepared2 WHERE round(wip_entity_id) = 349225

Answers


Alexandru Dataiker, Dataiku DSS Core Designer, Dataiku DSS ML Practitioner, Dataiku DSS Adv Designer, Registered Posts: 1,400 Dataiker

Hi,

It's probably best to share a job diagnostic via a support ticket so we can investigate this in more detail.

The behavior here can vary depending on the settings at the instance, see "Lock strongly typed input":

If the column is being changed, e.g., renamed, or any processing is performed on it, DSS may infer its type again and switch to double.

If you only see the issue after writing but not during the preview of the prepare recipe, it may be due to how DSS and Redshift interact: the DSS double type is written as float8 in Redshift. You could potentially treat the column as a string by setting its type in the prepare recipe, or you can modify the SQL creation statement in the advanced tab of the recipe to set it to numeric.
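To illustrate why keeping the column as a string or numeric can help, here is a small Python sketch using the standard `decimal` module. It is only an analogy for the type choice, not DSS or Redshift code; the helper names `via_double` and `via_text` and the sample value are made up for the example. A value shaped like numeric(38,15) can carry more digits than a 64-bit double holds, while a text round trip keeps every digit.

```python
from decimal import Decimal

def via_double(value: Decimal) -> Decimal:
    """Round-trip a decimal through a 64-bit float (like a double/float8 column)."""
    return Decimal(float(value))

def via_text(value: Decimal) -> Decimal:
    """Round-trip a decimal through its string form (like a string column)."""
    return Decimal(str(value))

# Hypothetical value with 20 integer digits and 15 decimal places.
wide = Decimal("12345678901234567890.123456789012345")

print(via_text(wide) == wide)    # True: text keeps every digit
print(via_double(wide) == wide)  # False: a double cannot hold 35 significant digits
```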

Thanks