SQL Compilation for Date-Partitioned Datasets for recipes that require all Partitions

Hi, this is my first time working with partitions so please let me know if I'm making a mistake.

I have partitioned various datasets by a date column (set partition timeframe to DAY) and have set things up so that my scheduled scenarios for initial recipes are only doing transformations on the latest few days worth of data in order to reduce computation.

There is a point in my flow however, where a recipe depends on the full dataset (all the partitions by day). (This is a group recipe that makes aggregates for each customer across the full timeframe of sales).

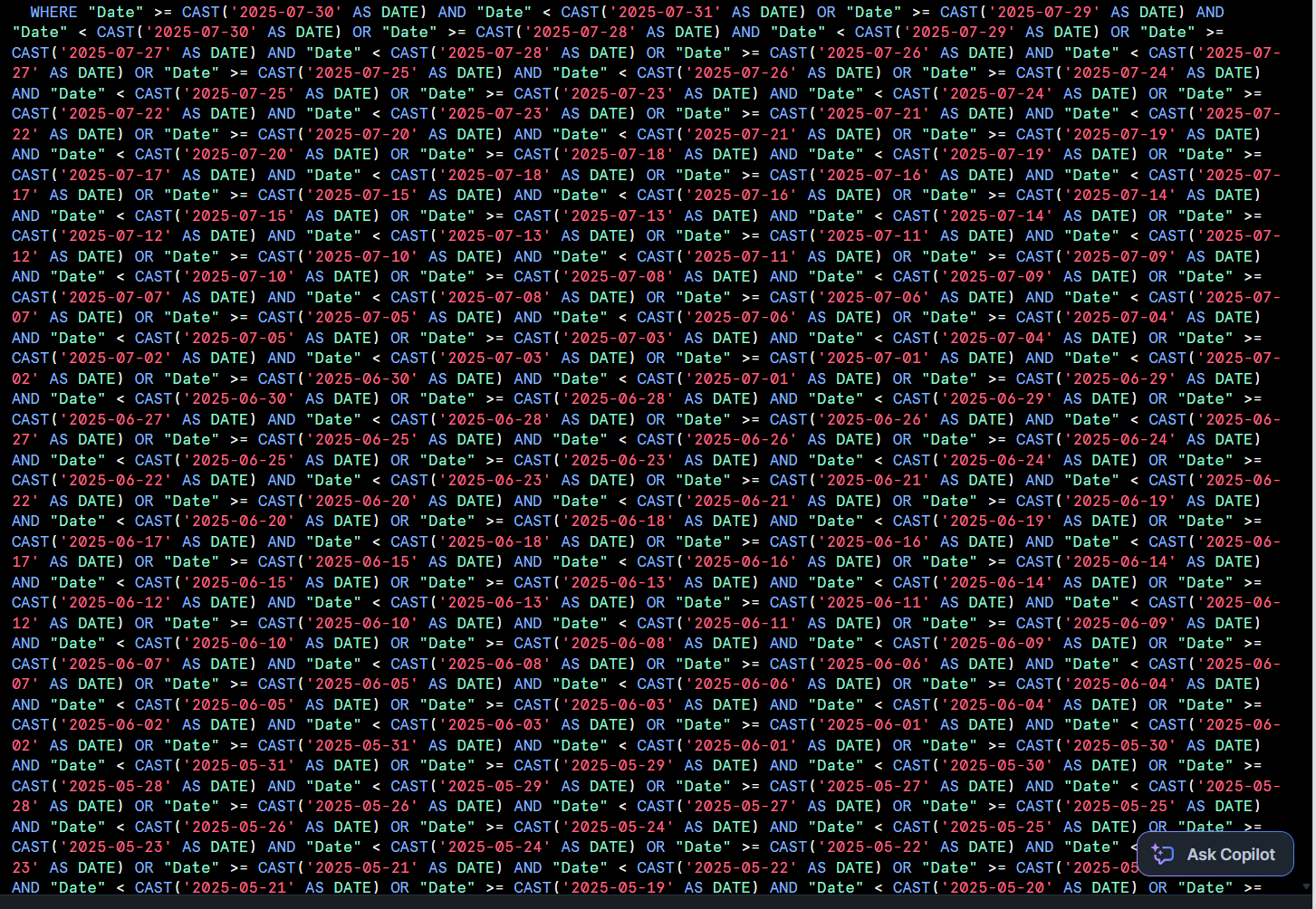

When in the visual recipe, I get the option to choose which input partitions are required to build the output dataset. Regardless if I select 'all available', or do a custom date range encompassing the earliest point in my data until now, the SQL that is being executed looks like this:

This is clearly super inefficient and takes much longer to run. The workaround I have implemented is to just switch to a SQL recipe and remove the date range condition in the WHERE clause. I was just wondering if I am missing a trick or misunderstanding something.

Thanks.

Answers

-

Alexandru Dataiker, Dataiku DSS Core Designer, Dataiku DSS ML Practitioner, Dataiku DSS Adv Designer, Registered Posts: 1,400 Dataiker

Alexandru Dataiker, Dataiku DSS Core Designer, Dataiku DSS ML Practitioner, Dataiku DSS Adv Designer, Registered Posts: 1,400 DataikerHi,

If you want to avoid any overhead (e.g., duplicate the data) and need to interact with all partitions of an SQL dataset, one way to do this is to create an empty Python recipe ( which does nothing) where the output dataset is manually set to the same table as the input but not partitioned. Then, you can continue with your group by recipe on your full data.

There is no underlying difference in a partitioned SQL table other than how DSS interacts with that dataset.