Rapid RAG Prototyping with Ease

As Generative AI captures attention across industries, Retrieval-Augmented Generation (RAG) use cases have become essential for grounding responses in real data. One of the challenges with RAG is gathering the right amount of evidence to return the correct answer to the end user. Dataiku makes it easy to iterate transparently through the build and, more importantly, the testing process before rolling out to a larger audience.

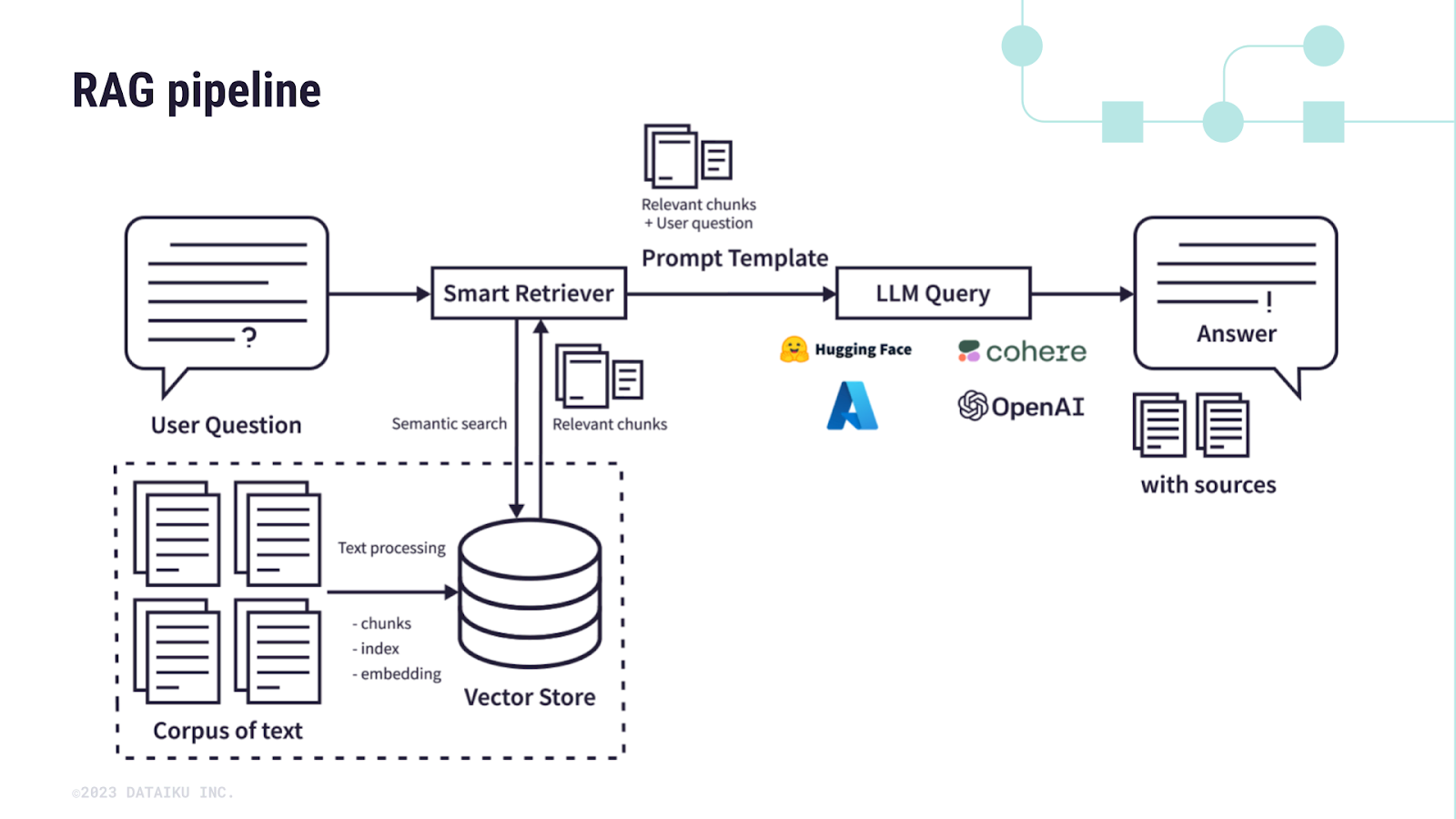

RAG Basics

At their core, RAG systems retrieve relevant context and feed it into the LLM to help formulate the answer. This means that responses are both fluent and tied to specific sources. For enterprises, this helps make answers trustworthy, explainable, and reflective of valid internal knowledge. The process begins with embeddings, which are vector representations of text that capture semantic meaning. A company’s knowledge artifacts (documents, FAQs, reports) are transformed into embeddings and stored in a vector database. When a user asks a question, the system retrieves the most relevant content and passes it along with the query to the LLM. The model then generates an answer grounded in the retrieved evidence.
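To make the embed, retrieve, and prompt flow concrete, here is a minimal sketch in plain Python. It is not how Dataiku implements RAG: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector database, and the sample documents and all function names are invented for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def build_prompt(query, context_docs):
    """Combine retrieved context and the user question into one LLM prompt."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Invented sample text, standing in for chunks of real HR documents.
DOCS = [
    "Remote employees may expense home internet up to a monthly limit.",
    "The gym reimbursement covers fitness memberships.",
    "Vacation requests must be submitted two weeks in advance.",
]

question = "internet reimbursement for remote employees"
print(build_prompt(question, retrieve(question, DOCS, k=1)))
```

The prompt string is what would be sent to the LLM; the generation step itself is left out because it depends on the model being used.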

No-Code RAG

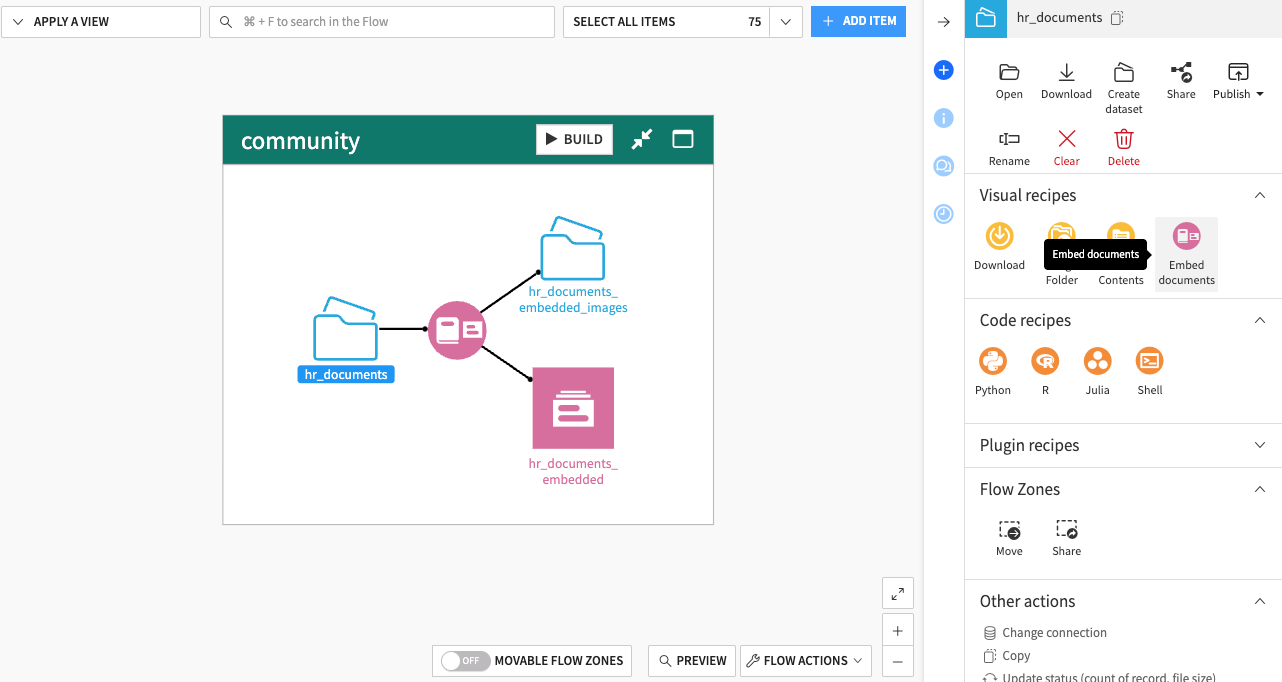

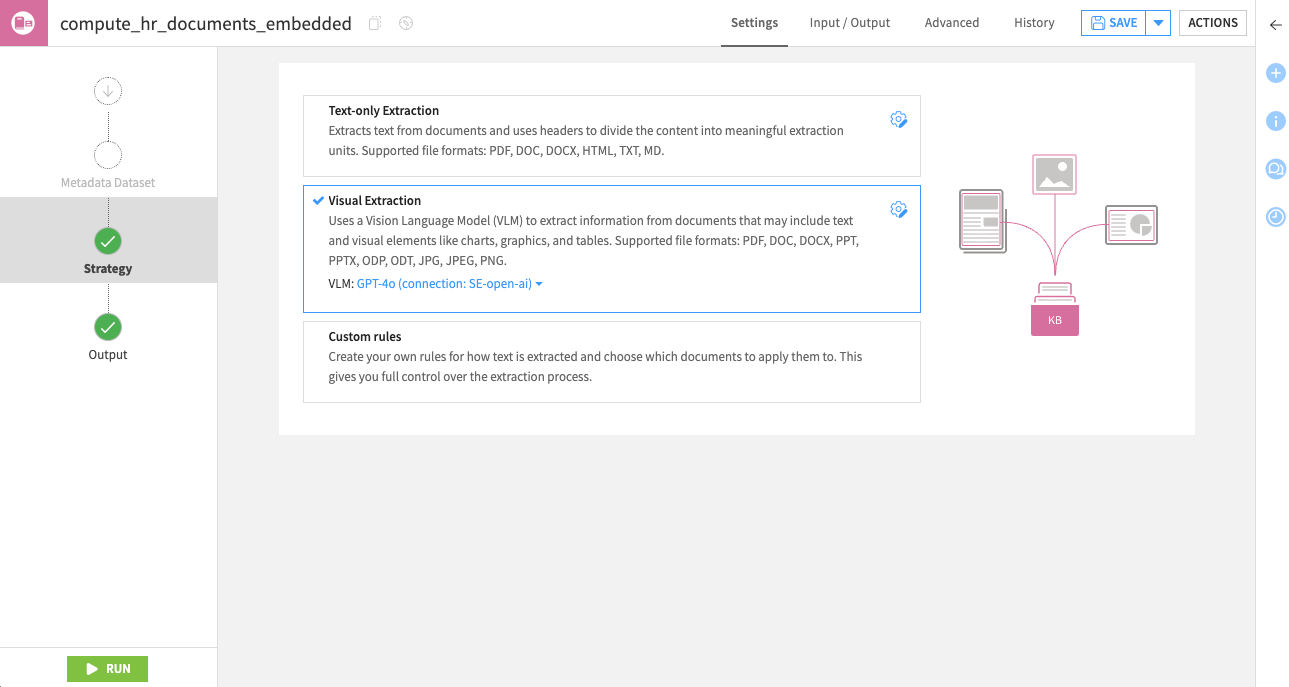

For starters, Dataiku integrates RAG directly into the platform. Users can embed their data through the Embed Documents Recipe. These files often start within a managed folder, which can be local to the Dataiku file system or point to a blob storage location, such as S3, Azure Blob Storage, or Google Cloud Storage.

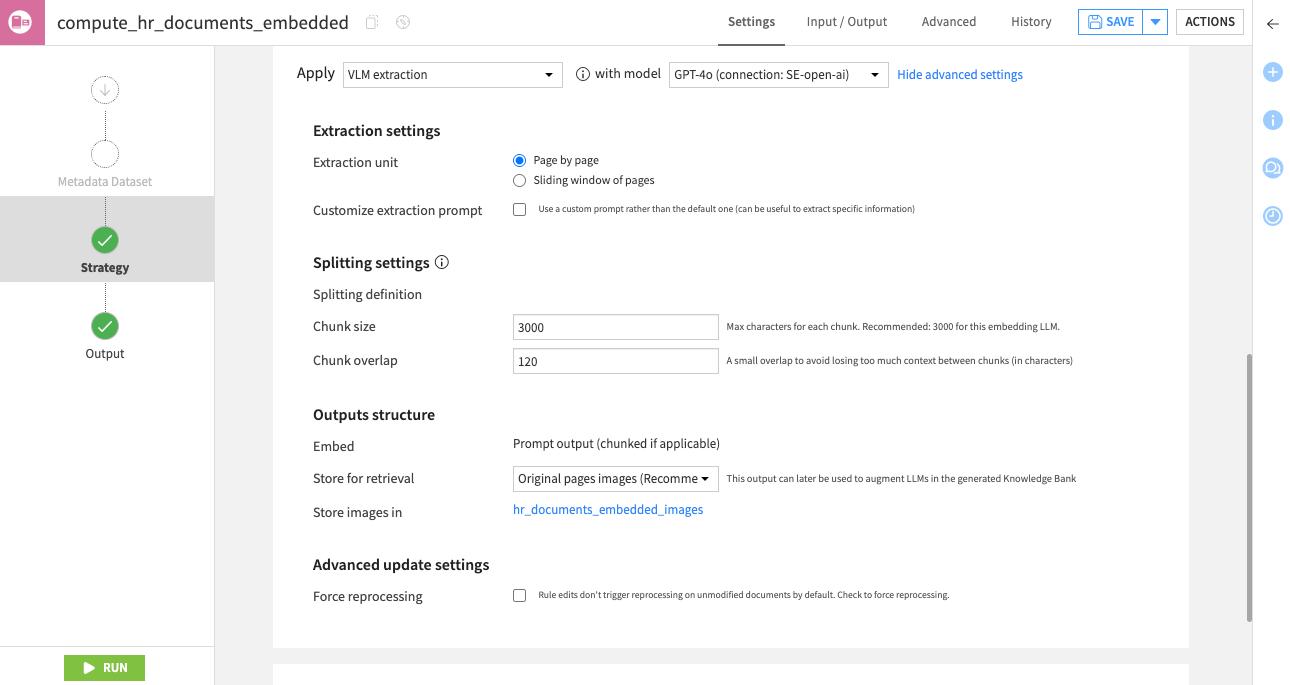

Depending on the documents being parsed and the LLM being used, users have a variety of extraction options within the Embed Documents Recipe. Advanced settings control features such as the extraction unit, chunk size, and overlap. These levers help capture the right context from documents.
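As a rough illustration of how chunk size and overlap interact, the sketch below splits text into fixed-size character windows that share a few characters with their neighbors. This is an assumption-laden simplification: the function name and parameters are hypothetical, and the recipe's actual splitting logic and units are not described in this post.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into character windows of chunk_size that overlap by `overlap`."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks

print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

A larger overlap lowers the chance that a sentence is cut in half at a chunk boundary, at the cost of storing and embedding more redundant text.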

True to Dataiku’s open concept for the LLM Mesh, users can leverage the LLMs, embedding models, and vector stores they have access to in the platform. Importantly, all of this is configured through clicks and drop-down menus, making the process accessible to anyone tasked with building reliable chatbots or knowledge assistants downstream.

Testing RAG Queries

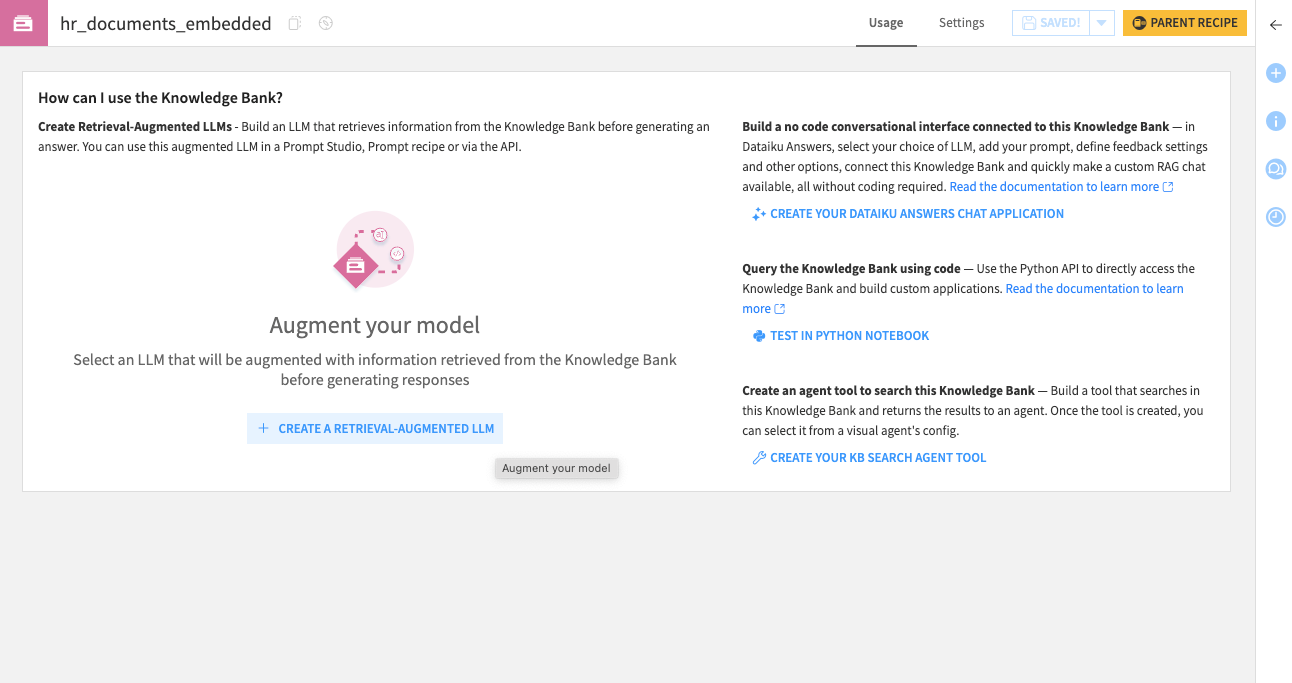

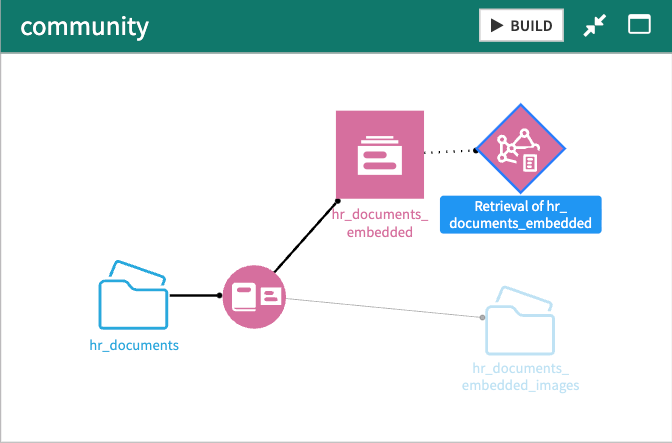

Once the Knowledge Bank (vector store) is created, Dataiku suggests several ways to leverage it. We’ll focus on creating a Retrieval-Augmented LLM, but note that users can also go directly to a Dataiku Answers Chat Application, test in a Python notebook via the API, or create a KB Search Agent Tool to use with AI Agents.

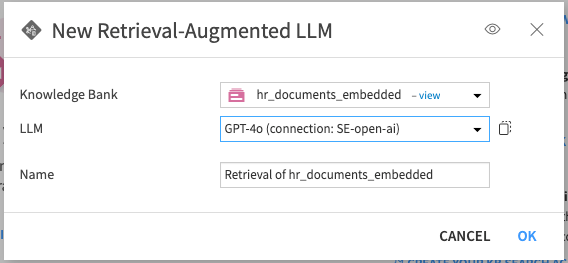

All we need to specify for the Retrieval-Augmented LLM is a name and which LLM to pair it with.

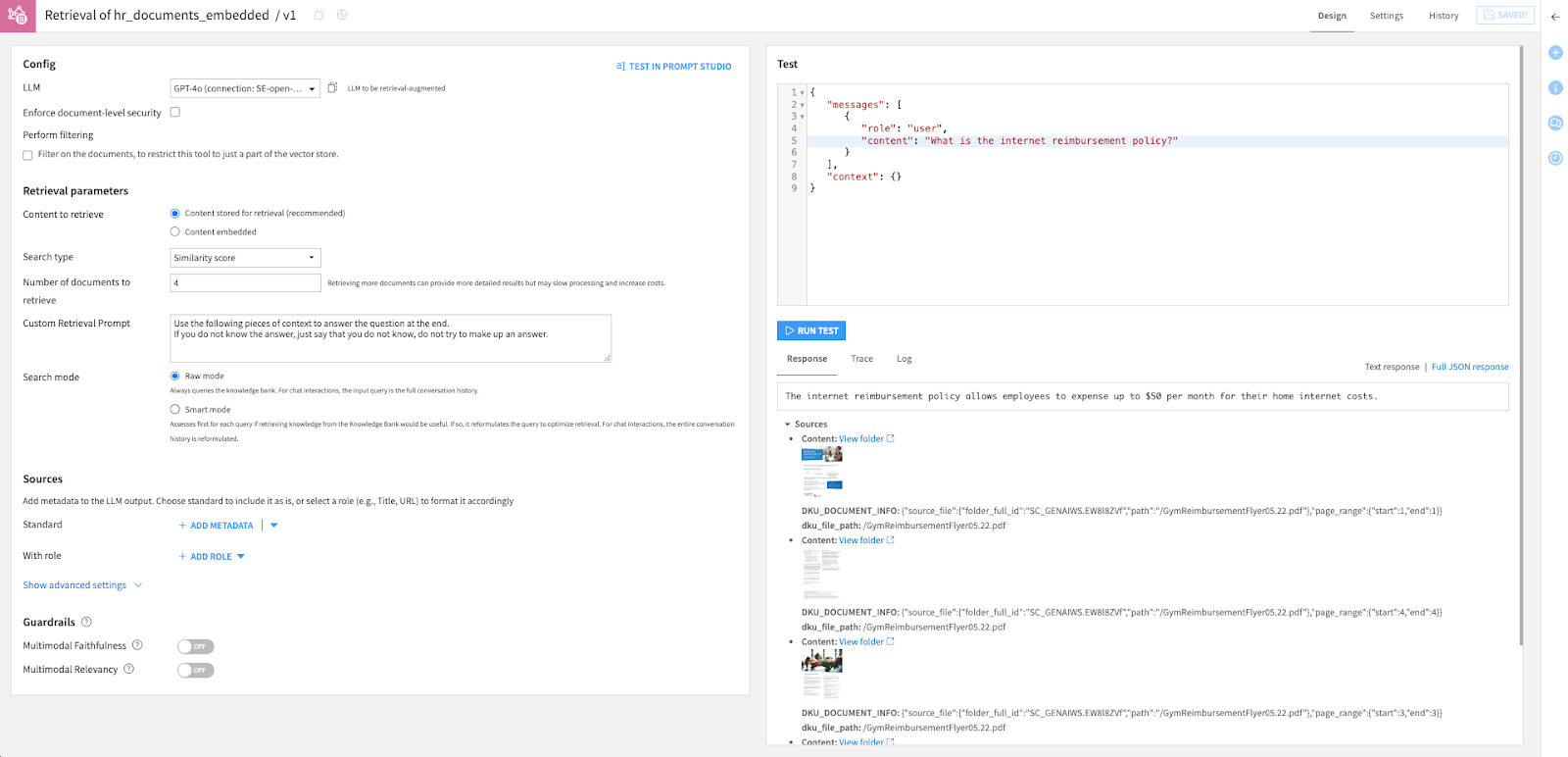

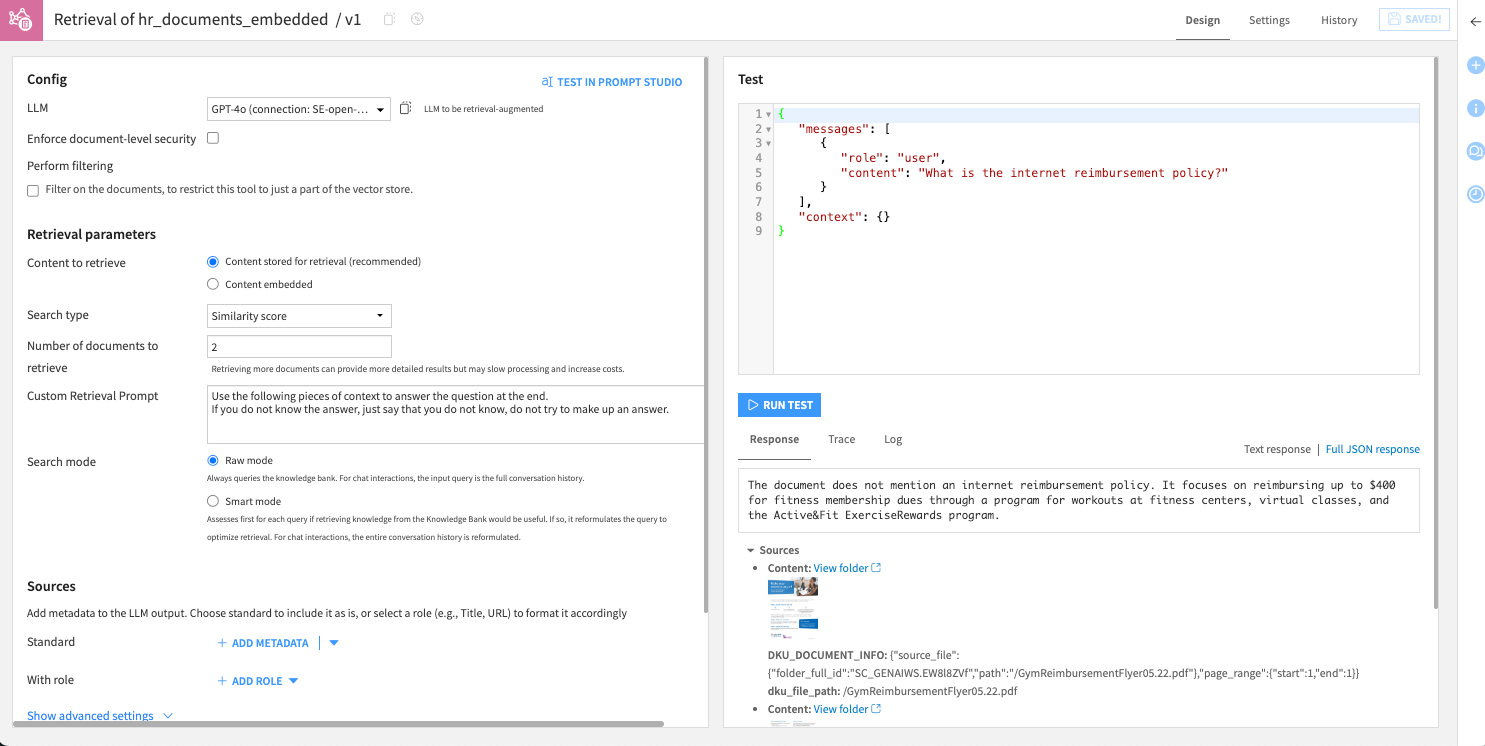

From here, we can start to test queries. In this example, we use HR-related PDFs to determine whether the company supports internet reimbursement for remote employees. With the default settings, which retrieve 4 documents ranked by Similarity Score, the model returns an accurate response with sources cited. While it may seem like we are ready to use this in a chatbot, the results deserve a closer look.

Unfortunately, the top 3 sources by Similarity Score didn’t actually contain the information needed to answer the question. Source 4 did, which gave the LLM enough to produce the correct answer. However, requiring 4 sources seems like overkill for this simple question-and-answer use case.

This matters in practice: if we reduce retrieval to just 2 sources by Similarity Score, the LLM answers about a gym reimbursement instead and claims not to know about the internet reimbursement policy. Technically, it’s not hallucinating; it simply lacks the knowledge, because neither of the top 2 sources contains information on the internet policy.

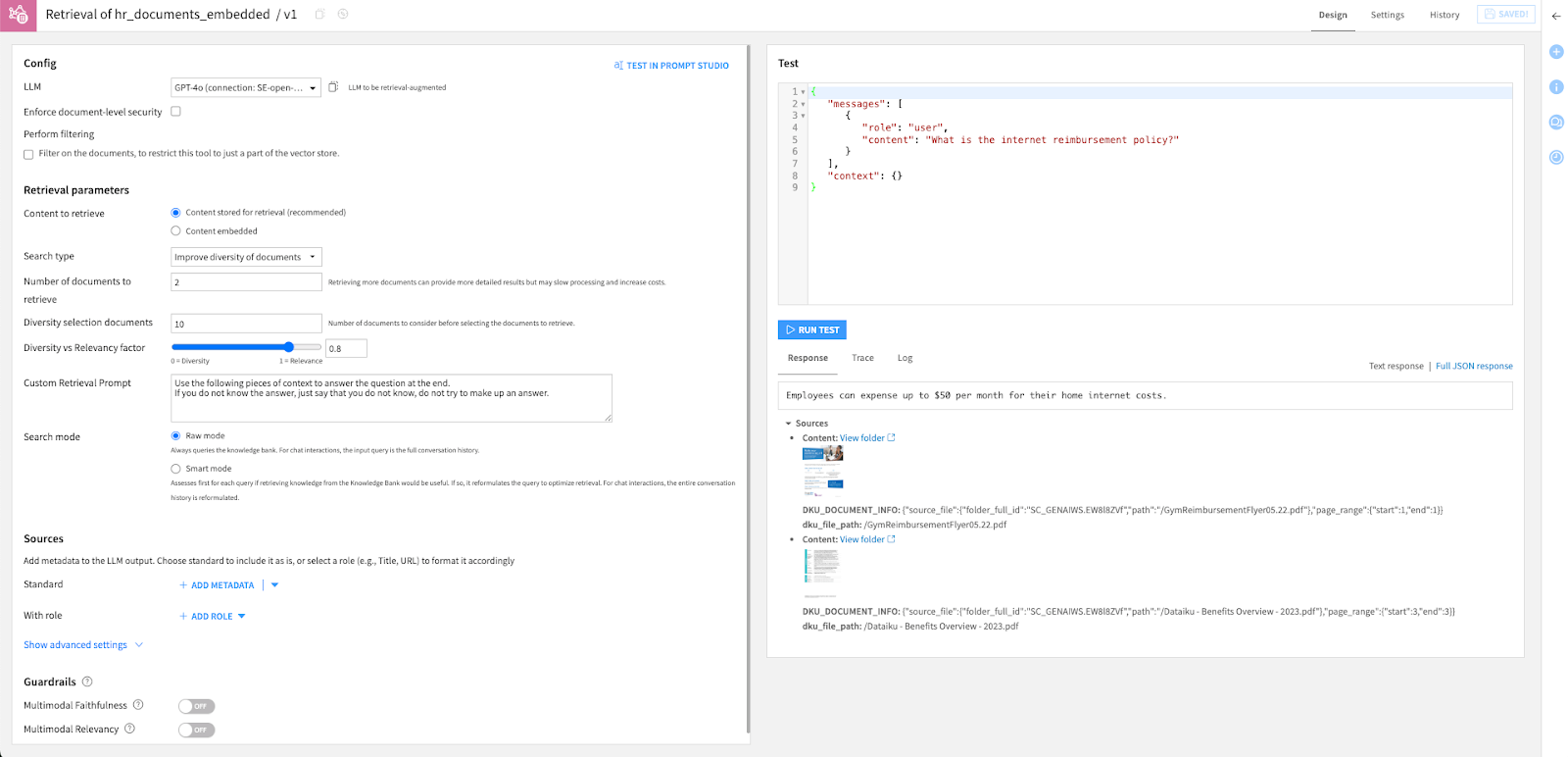

Thankfully, we can modify some of the other parameters to get back to the proper answer. Instead of the Similarity Score, we can switch the search type to Improve diversity of documents. We still retrieve the same number of documents (2), but we can adjust the diversity vs relevancy factor over a larger pool of candidate documents to end up with a more appropriate, pruned selection of sources. We now see the correct response being returned in the testing window.
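Diversity-aware retrieval of this kind is commonly implemented with maximal marginal relevance (MMR). The sketch below assumes that technique; this post does not confirm which algorithm Dataiku uses internally. The 2-D vectors, document names, and parameter names (`fetch_k`, `lambda_mult`) are made up so that two near-duplicate chunks outrank a third, different chunk on pure similarity.

```python
import math

def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def top_k(query, docs, k):
    """Plain similarity search: the k document names closest to the query."""
    ranked = sorted(docs, key=lambda name: cosine(query, docs[name]), reverse=True)
    return ranked[:k]

def mmr(query, docs, k, fetch_k, lambda_mult=0.5):
    """Pick k names from the fetch_k nearest candidates, penalizing redundancy.

    lambda_mult near 1.0 favors relevance; near 0.0 favors diversity.
    """
    candidates = top_k(query, docs, fetch_k)
    selected = []
    while candidates and len(selected) < k:
        def score(name):
            relevance = cosine(query, docs[name])
            # Similarity to the closest already-selected document (0 if none yet).
            redundancy = max(
                (cosine(docs[name], docs[s]) for s in selected), default=0.0
            )
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected

# Toy vectors, invented for illustration only.
QUERY = (1.0, 0.0)
DOCS = {
    "gym_policy_a": (0.9, 0.1),
    "gym_policy_b": (0.9, 0.12),   # near-duplicate of gym_policy_a
    "internet_policy": (0.6, -0.8),
}

print(top_k(QUERY, DOCS, k=2))         # ['gym_policy_a', 'gym_policy_b']
print(mmr(QUERY, DOCS, k=2, fetch_k=3))  # ['gym_policy_a', 'internet_policy']
```

With the same budget of 2 documents, the similarity search spends both slots on near-identical chunks, while MMR uses the second slot for a less similar but non-redundant chunk drawn from the wider candidate pool.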

Value Drivers

This rapid testing of the Retrieval-Augmented LLM is an efficient way to validate RAG use cases before building an application around the API. It’s especially useful before configuring the native Dataiku chatbots, since it avoids frequent rebuilds when assessing the RAG model on live queries.

Ultimately, RAG represents a shift in how enterprises think about knowledge management. With RAG in Dataiku, enterprises can test ideas faster and deploy assistants they can trust.

*Note that this post mostly covered unstructured files. If you want to ask questions of your tabular data, check out this community post on how to Chat Your Way to Data Insights with Dataiku Answers.