Using Dataiku

- Hi guys, I have a flow with 2 different scenarios. I have one variable, v_idproduct, used in the post-filter of a join recipe, in SQL code (id_product IN v_id_product). In each scenario I have a different lis…
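One way to give each scenario its own list is to store the whole parenthesized list as the variable's value. The sketch below assumes that approach and renders a Python list of IDs as a SQL `IN` list literal. The helper name `to_sql_in_list` and its `quote` parameter are hypothetical. DSS usually expands variables written as `${v_id_product}` in SQL, so please check how your recipe actually references the variable.

```python
def to_sql_in_list(ids, quote=True):
    """Render ids as a SQL IN-list literal, e.g. ('P1', 'P2') or (101, 102).

    Hypothetical helper: the result is meant to be stored as the value of
    a per-scenario variable such as v_id_product.
    """
    if not ids:
        # "IN ()" is invalid SQL, so fail early instead of producing it
        raise ValueError("a SQL IN list needs at least one value")
    if quote:
        # Quote each id as a SQL string literal, doubling embedded single quotes
        items = ["'" + str(i).replace("'", "''") + "'" for i in ids]
    else:
        # Numeric ids: coerce through int() so non-numeric strings raise
        # ValueError instead of reaching the SQL
        items = [str(int(i)) for i in ids]
    return "(" + ", ".join(items) + ")"


# Example: one value per scenario
print(to_sql_in_list(["P1", "P2"]))            # ('P1', 'P2')
print(to_sql_in_list([101, 102], quote=False))  # (101, 102)
```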

- What functionality exists to show the relationships between flow zones? Say we have 42 flow zones with an average of 50 datasets each. Is there a way to summarize the relationships? I am interested in …

Last answer by Turribeach

You can get the information you want using the Dataiku Python API:

https://developer.dataiku.com/latest/concepts-and-examples/flow.html#working-with-flow-zones
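Once the zone of each dataset and the recipe input/output pairs have been collected (for example with the flow-zone API linked above), summarizing the relationships is mostly a counting exercise. This is a minimal sketch under that assumption. The input shapes (`dataset_zone`, `edges`) and the function name are hypothetical and are not part of the Dataiku API.

```python
from collections import Counter


def summarize_zone_links(dataset_zone, edges):
    """Count dataset-to-dataset edges that cross flow-zone boundaries.

    dataset_zone: dict mapping dataset name -> zone name (hypothetical input)
    edges: iterable of (input_dataset, output_dataset) pairs, one per
           recipe input/output combination (hypothetical input)
    Returns a Counter keyed by (source_zone, target_zone).
    """
    links = Counter()
    for src, dst in edges:
        # Datasets missing from the mapping are grouped under "<unknown>"
        src_zone = dataset_zone.get(src, "<unknown>")
        dst_zone = dataset_zone.get(dst, "<unknown>")
        # Only edges between two different zones describe a zone relationship
        if src_zone != dst_zone:
            links[(src_zone, dst_zone)] += 1
    return links


zones = {"raw_sales": "Ingest", "clean_sales": "Prep",
         "features": "Model", "scores": "Model"}
edges = [("raw_sales", "clean_sales"),
         ("clean_sales", "features"),
         ("features", "scores")]
for (src, dst), n in sorted(summarize_zone_links(zones, edges).items()):
    print(f"{src} -> {dst}: {n} edge(s)")
```

With 42 zones, the resulting counter is small enough to print as a zone-to-zone table or feed into a graph tool.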


- Appending to the output dataset in Python code recipes. Currently the way to do this is with a checkbox in the recipe settings that says "Append instead of overwrite". However, this is limiting a…

Last answer by raphaelro

Hi Turribeach, thanks again!

2a. I feel like you aren't hearing my issue here. I'm not asking why the schema tab exists, but rather why its fields are editable: why can you change the types manually if doing so doesn't do anything? Don't you see how that could be confusing for a user?

2b. I feel like you aren't hearing my issue here. I'm not asking for a workaround so that my NVARCHAR values are never cut off. I'm asking whether it's possible to change the default settings of NVARCHAR schema processing in SQL, since I think many people would agree that setting it to the maximum string length in the column is not very practical.

- Hello everyone, I am working on a Dash web app to create a chatbot using RAG. I have stored my HTML files in a Dataiku folder. When the LLM responds to the user, I display the sources of the documents…

Last answer by Turribeach

This page covers your needs:

https://developer.dataiku.com/latest/tutorials/webapps/common/resources/index.html

- Hi Team, Requesting your urgent attention to help us with the official documentation on Spark vs DSS engine with actual scenarios. Primarily we are looking for when to use which. What are the recipes which …

Last answer by Turribeach

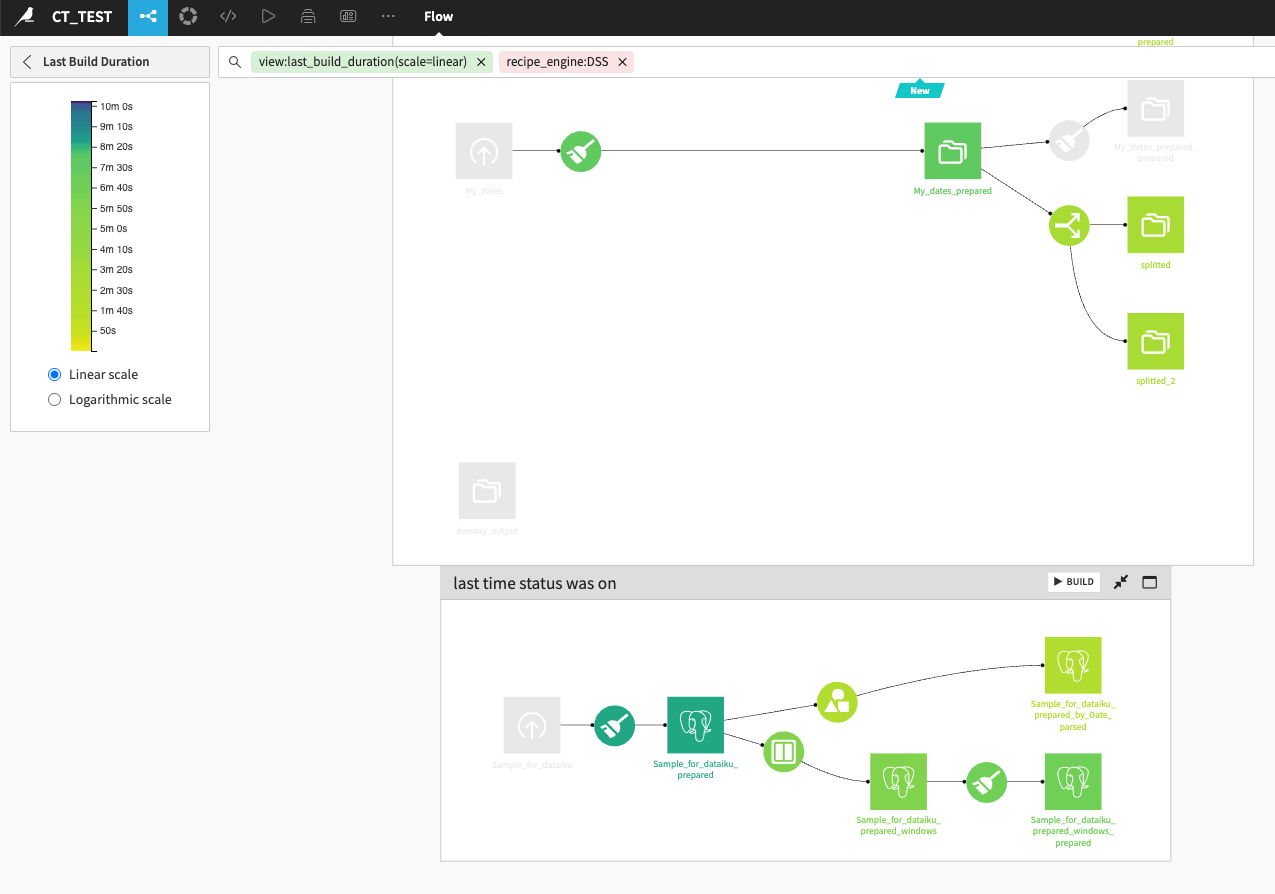

There is no magic logic to decide which compute engine will be better for each recipe. Generally speaking Spark should be better for larger datasets but it will depend on many other factors. If using a visual recipe sometimes the Spark translation is not very efficient and DSS engine is better. Sometimes custom Spark code can improve on what DSS does. There is also an overhead to using Spark on small datasets so it might not be worth doing so. The best approach to determine the best engine is to run on both engines and see which one is the fastest. You can multi-select recipes in the flow holding the Ctrl key and change the engine for all of them. You can also use the Recipe Engines flow view to see where each recipe is running. and in v13 you can even combine these two views at the same time to get something like this which shows me the last build duration for all DSS Engine recipes:

So if you were to flip all these to Spark, re-run and then compare the two views and figure which one runs faster than the other.
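The comparison described above can be sketched as a small script once you have the last build durations from both runs. This is a minimal illustration, assuming the durations have been copied into two dicts keyed by recipe name. The function name and input shapes are hypothetical, and on a tie it prefers the DSS engine because of Spark's start-up overhead.

```python
def pick_faster_engine(dss_seconds, spark_seconds):
    """For each recipe timed on both engines, return (faster engine, seconds saved).

    dss_seconds / spark_seconds: dict recipe name -> last build duration in
    seconds (hypothetical inputs, e.g. read off the flow views after each run).
    """
    choice = {}
    # Only recipes timed on both engines can be compared
    for recipe in dss_seconds.keys() & spark_seconds.keys():
        dss, spark = dss_seconds[recipe], spark_seconds[recipe]
        if spark < dss:
            choice[recipe] = ("Spark", dss - spark)
        else:
            # On a tie, prefer DSS since Spark adds job start-up overhead
            choice[recipe] = ("DSS", spark - dss)
    return choice


dss_run = {"join_orders": 120, "prepare_small": 5}
spark_run = {"join_orders": 45, "prepare_small": 30}
for recipe, (engine, saved) in sorted(pick_faster_engine(dss_run, spark_run).items()):
    print(f"{recipe}: use {engine} (saves {saved}s)")
```

A single run per engine can be noisy, so averaging a few builds before deciding is probably safer.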

- I would like to know which data is used in a flow. For example, my input dataset contains 10 columns but only 4 are needed for calculations; I want to be able to identify the 6 "useless" columns. Do you hav…

Last answer by Turribeach

In v12 there isn't much you can do, but v13 introduces Column-level Data Lineage, which is another good reason to upgrade:

https://doc.dataiku.com/dss/latest/data-catalog/data-lineage/index.html
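Without column-level lineage, a rough manual alternative is possible if you can list which input columns each downstream recipe reads (by hand, or from the recipes' settings). Finding the unused columns is then a set difference. This sketch assumes such a hand-built mapping; `unused_columns` and its inputs are hypothetical and do not come from the Dataiku API.

```python
def unused_columns(input_columns, columns_used_by_recipe):
    """Return the input columns that no downstream recipe reads, in input order.

    input_columns: list of column names in the input dataset
    columns_used_by_recipe: dict recipe name -> iterable of column names it reads
    (both hypothetical inputs compiled by hand)
    """
    used = set()
    for cols in columns_used_by_recipe.values():
        used.update(cols)
    return [c for c in input_columns if c not in used]


# 10 input columns, 4 of them read downstream -> 6 "useless" columns
cols = [f"c{i}" for i in range(1, 11)]
usage = {"recipe_a": ["c1", "c2"], "recipe_b": ["c2", "c5", "c9"]}
print(unused_columns(cols, usage))  # ['c3', 'c4', 'c6', 'c7', 'c8', 'c10']
```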

- Hello Community, I am using the Great Expectations package in a DSS project for my Data Quality checks. I have already installed it in my code env and am using it in a Python script for the time being. E…

Last answer by Mourad_Y

After trying everything, none of the parameters really work: the module still checks inside the /home/dataiku/ directory, and if you don't have access to it, you will still get an error.

Here is a quick, not-so-clean fix I made to get things done. You can put it in a function inside your libraries to declutter your code.

I understand this is out of the scope of this community, I just hope this helps somebody.

```python
# great-expectations==1.3.5
import pathlib

import great_expectations as gx
from great_expectations.data_context import AbstractDataContext

YOUR_CONF_PATH = pathlib.Path("SET/YOUR/PATH/HERE…")  # use a managed folder for example

# Point GX's root config directory and file away from the home directory.
# These are private class attributes, so this may break in other GX versions.
AbstractDataContext._ROOT_CONF_DIR = YOUR_CONF_PATH / ".great_expectations"
AbstractDataContext._ROOT_CONF_FILE = AbstractDataContext._ROOT_CONF_DIR / "great_expectations.conf"

# Create a file-backed data context
context = gx.get_context(mode="file")
```

- Hello everyone, In my Dataiku Flow, I have a RAG setup that includes embeddings and prompts. I'd like to replicate this process, achieving the same results as in Prompt Studio, in a Dash web app. The go…

Solution by Alexandru

Hi,

You can leverage the headless API from a Dash webapp:

https://developer.dataiku.com/latest/tutorials/webapps/common/api/index.html

Use the Knowledge Bank (KB) and LLM Mesh APIs:

https://developer.dataiku.com/latest/concepts-and-examples/llm-mesh.html#using-knowledge-banks-as-langchain-retrievers

Thanks

- While I am migrating from dev to val, I am getting the error below. Can you please tell me how I can fix it? Importing archive... Traceback (most recent call last): File "/app/dss_install/dataiku-dss-13.…

Last answer by

- Hello Everyone, I created a connection to my Azure SQL DB using the MS SQL Server connector. The connection went well, but when I clicked on "Get table list", I got the following error message: Oops: …

Last answer by Alexandru

Hi @samuel_acr_96 ,

If the issue still persists, can you please open a support ticket and attach the instance diagnostics taken immediately after your tests? The logs may provide more information on the exact exception.

Thanks


Leaderboard

| Member | Points |
|---|---|
| Turribeach | 3702 |
| tgb417 | 2515 |
| Ignacio_Toledo | 1082 |