Study: AI-Enabled Energy Management System

Introduction

Energy management systems (EMS) aim to optimize energy production and consumption patterns to reduce overall consumption and emissions. Such systems have been conceptualized for industrial systems, such as drilling equipment and manufacturing centers. They are also a hot topic for systems that take “spare” (in other words, cheap) energy and transform it into a storable form, such as hydrogen or battery charge, to be consumed when it is needed. Unfortunately, this kind of storage is on the expensive side.

From a data scientist's perspective, this is a really fun topic to work on, but it usually remains at a conceptual stage and is only applied in real life on a geological time scale. So, how can one work on this topic and still have an impact? As it turns out, a complex system such as a drilling rig, a shipping vessel, or any system with production and load plans is conceptually similar to a home setup with solar panels.

System Description

For this study, our system will be: my home! Its components are the following:

| Source | Sink |
| --- | --- |
| Electricity Grid | Electricity Grid |
| Solar Panels (1.1 kW) | Boiler (1.1 kW) |
| UPS (1 kWh) | UPS on Work from Home Station (storage 1 kWh, consumption 200 W) |

The system is metered and controlled via connected switches.

Energy source component of the system: solar panels (1100W)

Problem Statement

As with any good project, let's start with a problem statement: In my energy system, my peak energy production doesn't match my peak energy consumption, resulting in wasted energy. What’s more, the peak consumption corresponds to times when the CO2 intensity of getting energy from the grid is the highest. Finally, the legal framework in which my system operates doesn't allow me to monetize any electricity injected back into the grid.

Energy consumption of my system (which is, again, my home!). The baseline consumption is around 75W. The consumption drops to zero when the solar panels produce more than the local consumption. The peak consumption corresponds to the water boiler.

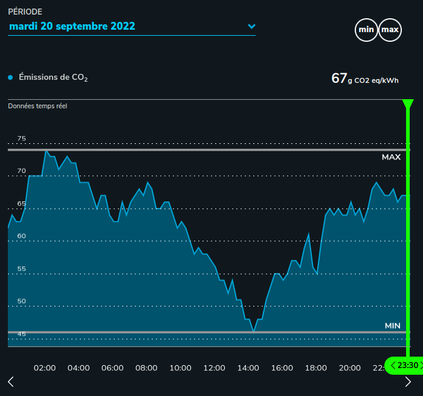

CO2 intensity in g CO2 eq/kWh

Solution

I need to implement a system that stores energy and returns it when consuming it from the grid would have a higher carbon footprint.

The constraints are:

- No new equipment (Large batteries have a very bad carbon footprint).

- The business stakeholders will not accept a loss of comfort (which translates into a minimum of 2.5 hours per day of the water boiler being ON).

- Running the automation system should be energy efficient.

The two batteries available on the system are:

- A water boiler (transforming electricity into heat, to be delivered as hot water). Assuming a 15-year life for the asset, a charge cycle costs 0.03$.

- A UPS (storing up to 1kWh). However, a charge cycle costs about 0.34$ in asset depreciation, which is higher than the cost of the same energy from the grid (0.17$/kWh).

Therefore, the optimization will focus on the boiler. Future optimization of the system could look into factoring in the heating system.
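The reasoning behind leaving the UPS out can be checked with a few lines of arithmetic. This is a minimal sketch using only the figures quoted above; the function name is illustrative, and it assumes a charge cycle stores the full 1 kWh.

```python
# Figures quoted in the text above.
GRID_PRICE_PER_KWH = 0.17   # $ per kWh bought from the grid
UPS_CYCLE_COST = 0.34       # $ of asset depreciation per charge cycle
UPS_CAPACITY_KWH = 1.0      # kWh stored per cycle (assumes a full cycle)


def storage_cost_per_kwh(cycle_cost: float, capacity_kwh: float) -> float:
    """Depreciation cost of cycling one kWh through a storage asset."""
    return cycle_cost / capacity_kwh


ups_cost = storage_cost_per_kwh(UPS_CYCLE_COST, UPS_CAPACITY_KWH)

# Even if the stored energy itself were free, cycling the UPS would have to
# cost less than buying the same kWh from the grid to be worth it.
ups_is_worth_it = ups_cost < GRID_PRICE_PER_KWH

print(f"UPS: {ups_cost:.2f} $/kWh, grid: {GRID_PRICE_PER_KWH:.2f} $/kWh, "
      f"worth it: {ups_is_worth_it}")
```

With these numbers the depreciation alone is twice the grid price, which is why the boiler is the only storage worth scheduling.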

The EMS components are:

- One module that forecasts the CO2 intensity of the energy coming from the grid for the next day.

- One module that forecasts the solar panel production for the next day.

- One module that builds an hourly schedule of the CO2 intensity of the energy available in the overall system (grid and panels), so that the low-intensity hours can be identified.

- One module that allocates the 2.5 hours per day of the water boiler being ON and adapts the schedule based on real-time power production data.

Infrastructure

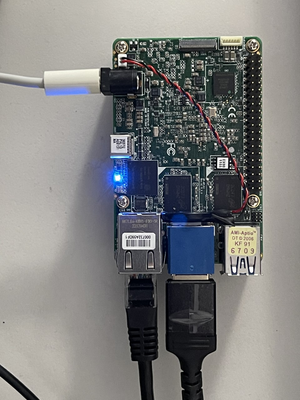

Local automation tasks are running on a UP4000 Board, continuously consuming about 12W.

UP4000 board, used for local automation tasks (fetching wattmeter measurements, running orchestration tasks, and sending ON/OFF instructions to power outlets/lines)

Wattmeters and outlets/lines are controlled by a Netio PowerPin.

CO2 Intensity Forecast

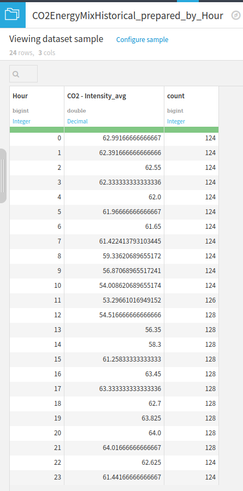

CO2 intensity data is available in real time through APIs. The data is highly cyclical, with a 24-hour period and seasonal variations due to activities such as the maintenance of nuclear power plants.

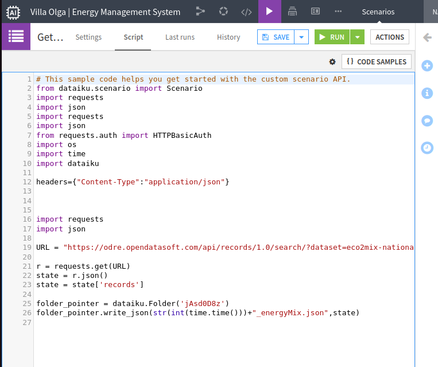

The data is retrieved via a Dataiku scenario that calls the API every 24 hours.

Dataiku Scenario, retrieving the data periodically

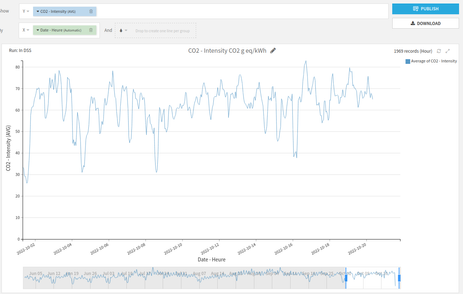

CO2 Intensity of electricity coming from the French grid

A complex forecast approach could have been used to forecast the time series (for example, auto-ARIMA methods). However, because the series is so regular, a very simple forecast is enough: the weekly average is used to forecast the time series.

24h CO2 Intensity forecast (for October 22nd, 2022)
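One way to read "weekly average" is an hour-of-day profile: for each hour, take the mean of the readings at that hour over the trailing week. The sketch below follows that reading, which is an assumption, as are the function name and the synthetic data; it is not the Dataiku recipe used in the project.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean


def hourly_profile_forecast(history):
    """Average past readings by hour of day.

    history: iterable of (timestamp, g CO2 eq/kWh) tuples covering the
    trailing week. Returns {hour_of_day: mean intensity}.
    """
    by_hour = defaultdict(list)
    for ts, intensity in history:
        by_hour[ts.hour].append(intensity)
    return {hour: mean(values) for hour, values in sorted(by_hour.items())}


# Synthetic data (not real grid measurements): 7 days of hourly readings
# with a higher value during an evening peak.
start = datetime(2022, 10, 15)
history = [
    (start + timedelta(hours=h), 90.0 if 18 <= h % 24 <= 20 else 50.0)
    for h in range(7 * 24)
]

forecast = hourly_profile_forecast(history)
print(forecast[19], forecast[3])
```

Because the profile is recomputed from the most recent week, it follows slow seasonal drifts without any model fitting.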

Solar Power Production Forecast

Solar power production forecast APIs are available, but not for free. What’s more, solar power production is highly dependent on trees and buildings that may cast a shadow on the solar panels, which a generic forecast does not account for. Therefore, it is uneconomical to use those sources.

For this solar power production forecast, the data available are:

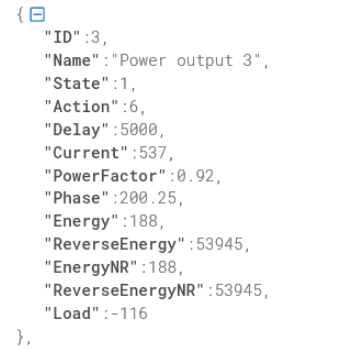

1. Minute-by-minute wattmeter readings from the solar panels (queried by a cron job on the UP4000 board).

A Load of -116 means that the solar panels are injecting 116W into the system

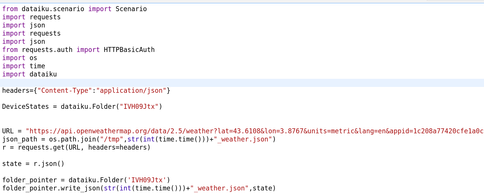

2. Hourly weather data, being queried from openweatherdata.org.

Weather data being queried

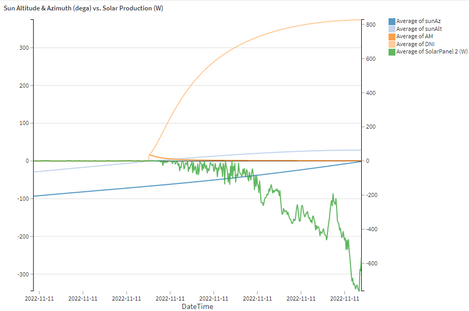

In addition to the weather data and power production measurements, specific features will be engineered: the sun azimuth and height, respective to the solar panels.
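As an illustration of this kind of feature, the sun's altitude and azimuth can be approximated from the day of the year, the local solar time, and the latitude. The sketch below uses Cooper's declination approximation and the standard spherical-astronomy formulas. It is a simplified stand-in, not the project's actual feature code: it ignores the equation of time, longitude, and atmospheric refraction, and it does not account for the tilt or orientation of the panels. The latitude of 43.6° N used in the example is an assumed approximate value for Montpellier.

```python
import math


def sun_position(day_of_year: int, solar_hour: float, latitude_deg: float):
    """Approximate sun altitude and azimuth, both in degrees.

    solar_hour is local solar time (12.0 = solar noon). Azimuth is measured
    clockwise from north, so 180 means due south.
    """
    lat = math.radians(latitude_deg)
    # Cooper's approximation of the solar declination.
    decl = math.radians(23.45) * math.sin(2 * math.pi * (284 + day_of_year) / 365)
    # Hour angle: 15 degrees per hour, negative in the morning.
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))

    sin_alt = (math.sin(lat) * math.sin(decl)
               + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    altitude = math.degrees(math.asin(max(-1.0, min(1.0, sin_alt))))

    # Azimuth measured from south (positive towards west), then shifted so
    # that it is measured from north.
    az_from_south = math.atan2(
        math.sin(hour_angle),
        math.cos(hour_angle) * math.sin(lat) - math.tan(decl) * math.cos(lat),
    )
    azimuth = (math.degrees(az_from_south) + 180.0) % 360.0
    return altitude, azimuth


# Around the March equinox (day 81) at an assumed latitude of 43.6 N.
for hour in (9.0, 12.0, 15.0):
    alt, az = sun_position(81, hour, 43.6)
    print(f"{hour:4.1f} h  altitude={alt:5.1f}  azimuth={az:5.1f}")
```

Negative altitudes mean the sun is below the horizon, so rows with a negative altitude can be dropped or clipped before training.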

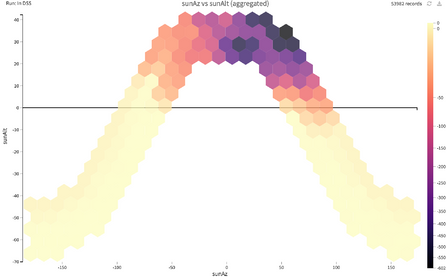

Sun altitude (dega) and azimuth (dega) vs Solar power (W) as a time series representation

Average Solar Power (W), aggregated by Sun Altitude (dega) and Azimuth (dega) on days where the weather code corresponds to sunny days.

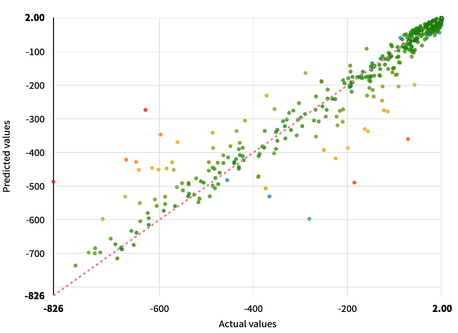

A simple regression model is then used to predict solar power generation from features such as the sun altitude, sun azimuth, weather forecast symbol, and cloud coverage. The model is based on four months of data, which covers neither all of the possible weather forecast codes nor the full range of sun altitudes and azimuths. Therefore, a simple regression model is more appropriate, as it should extrapolate better away from the training data.
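The extrapolation argument can be illustrated with a one-feature least-squares fit. Everything in this sketch is a simplification made for illustration: the combined `irradiance_proxy` feature is hypothetical, the samples are synthetic, and the real model uses several features inside Dataiku rather than this hand-written fit.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def irradiance_proxy(altitude_deg, cloud_fraction):
    """Hypothetical feature: sun height scaled down by cloud cover."""
    return max(0.0, math.sin(math.radians(altitude_deg))) * (1.0 - cloud_fraction)


# Synthetic training samples: (sun altitude in degrees, cloud fraction, watts).
# Only low sun altitudes, as if the data covered a few autumn months.
samples = [(10, 0.0, 150.0), (20, 0.5, 160.0), (30, 0.2, 380.0), (35, 0.0, 540.0)]
xs = [irradiance_proxy(alt, cloud) for alt, cloud, _ in samples]
ys = [watts for _, _, watts in samples]
slope, intercept = fit_line(xs, ys)

# Predict for a sun altitude (60 degrees) that never appears in the samples.
predicted_w = slope * irradiance_proxy(60, 0.0) + intercept
print(round(predicted_w))
```

A linear fit keeps following the trend for a sun altitude it has never seen, whereas a model that only interpolates between observed values would stay capped near the highest power in its training data.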

A continuous model retraining/deployment is put in place to update the model weekly as the training data starts to cover new months, weather codes, and changes in vegetation impacting solar power production.

Predicted solar power production value, from weather forecast data

Allocating Consumption at the Lowest Carbon Intensity Hours

On sunny days, the lowest carbon intensity hours are when the solar panels are producing. On rainy days (rare in Montpellier), they are when the CO2 intensity of the grid is low.

Based on this logic, the system allocates 3 hours of boiler ON time to the most CO2-efficient time slots, and adapts in real time when the cloud coverage doesn't match the weather forecast.
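The allocation logic can be sketched as a ranking of hours by the CO2 intensity of the energy that would feed the boiler. The blending rule is an assumption made for this example: solar output is counted as 0 g/kWh and covers the boiler first, and the rest comes from the grid. The function names and the 24-hour forecasts are illustrative.

```python
BOILER_W = 1100  # boiler power, from the system description


def effective_intensity(grid_gco2_per_kwh, solar_w, load_w=BOILER_W):
    """CO2 intensity of the energy feeding the boiler during one hour.

    Assumption: solar output covers the load first at 0 g/kWh, and the
    remainder is drawn from the grid.
    """
    grid_share = max(0.0, load_w - solar_w) / load_w
    return grid_gco2_per_kwh * grid_share


def allocate_boiler_hours(grid_forecast, solar_forecast, hours_on=3):
    """Return the sorted hours (0-23) with the lowest effective intensity."""
    scores = [
        (effective_intensity(grid, solar), hour)
        for hour, (grid, solar) in enumerate(zip(grid_forecast, solar_forecast))
    ]
    return sorted(hour for _, hour in sorted(scores)[:hours_on])


# Synthetic 24-hour forecasts (illustrative values only).
grid = [60] * 6 + [80] * 12 + [100] * 6                    # g CO2 eq/kWh
sunny = [0] * 10 + [400, 800, 1000, 900, 500] + [0] * 9    # W
rainy = [0] * 24                                           # W

print(allocate_boiler_hours(grid, sunny))  # midday hours, driven by the panels
print(allocate_boiler_hours(grid, rainy))  # night hours, driven by the grid
```

Ties are broken in favour of the earliest hour, which is an arbitrary choice of this sketch.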

The instructions for the system are stored in Dataiku datasets. There are two sets of instructions:

- The daily forecast instructions, which provide an hourly schedule aimed at minimizing the carbon footprint of the system. They are retrieved once a day and sent to the connected outlets.

- The real-time instructions, which automatically adjust the schedule when solar power production is above or below expectations. They are retrieved every 5 minutes and translated into an instruction sent to the outlet/line.

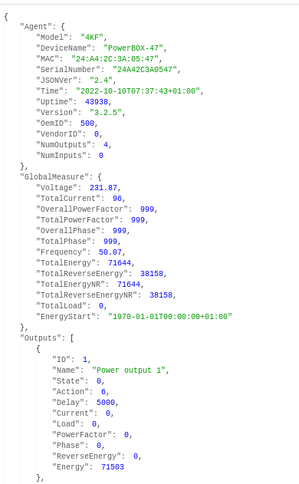

The state "0" is sent to the power output whose ID is 1 (the boiler) in order to switch off the boiler
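The real-time step can be sketched as a small decision function followed by the construction of the instruction. Both parts are assumptions: the decision rule and its thresholds are hypothetical, and the JSON shape (`Outputs`, `ID`, `Action`) is modelled on the format of NETIO's JSON API and should be checked against the device documentation. The sketch only builds the message and does not send it. A real controller would also track how many ON hours are still needed to meet the daily minimum.

```python
import json


def solar_power_from_load(load_w):
    """Wattmeter convention from the text: a negative load is an injection."""
    return max(0.0, -load_w)


def realtime_state(scheduled_on, measured_solar_w, expected_solar_w,
                   surplus_w=1100, tolerance=0.5):
    """Decide the boiler state (1 = ON, 0 = OFF) for the next 5 minutes.

    Hypothetical rule: switch ON whenever the panels alone can carry the
    boiler; switch OFF a scheduled slot when production is well below the
    forecast the slot relied on; otherwise follow the daily schedule.
    """
    if measured_solar_w >= surplus_w:
        return 1
    if (scheduled_on and expected_solar_w > 0
            and measured_solar_w < tolerance * expected_solar_w):
        return 0
    return 1 if scheduled_on else 0


def outlet_instruction(output_id, state):
    """JSON body setting one power output to a state (assumed format)."""
    return json.dumps({"Outputs": [{"ID": output_id, "Action": state}]})


# A scheduled slot that expected 900 W of sun while the wattmeter reads -116 W.
state = realtime_state(True, solar_power_from_load(-116), expected_solar_w=900)
print(outlet_instruction(1, state))
```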

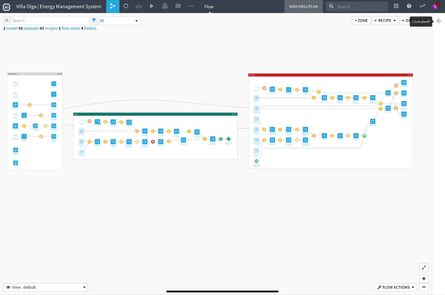

The overall flow looks like the following:

The flow is composed of multiple scenarios that retrieve the data from the sources (e.g., wattmeters, weather data, weather forecast data, CO2 intensity), train a model to forecast solar power production from weather forecast data, and finally send instructions to the elements of the system.

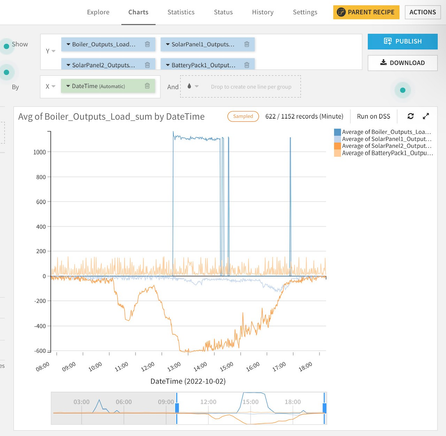

Time series representation of the consumption of the metered elements of the system (boiler, solar panels, work-from-home setup, and UPS)

Impact

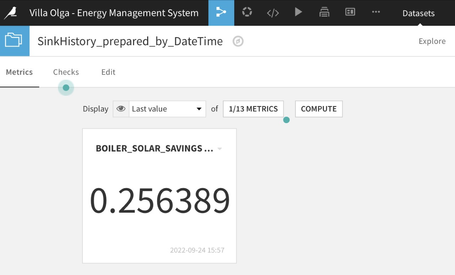

On a sunny day, by reallocating the consumption to optimum times, the carbon footprint and the energy consumed from the grid are reduced by about 25% compared to the footprint that would occur with the same system (solar panels, boiler) without the EMS. The savings are computed daily using a custom Dataiku metric:

Boiler daily carbon footprint reduction (25%), as a custom Dataiku dataset metric
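A reduction figure of this kind can be computed by comparing the grid-sourced footprint of two boiler schedules over the same day. The sketch below is not the Dataiku metric itself. The baseline schedule, the one-hour slots, the function names, and the data are all assumptions made for illustration, so the percentage it prints is not the measured 25%.

```python
def grid_footprint_g(schedule_hours, grid_gco2_per_kwh, solar_w, load_w=1100):
    """Grams of CO2 eq emitted by running the load during the given hours.

    Assumes one-hour slots and that solar output covers the load first.
    """
    total = 0.0
    for hour in schedule_hours:
        grid_kwh = max(0.0, load_w - solar_w[hour]) / 1000.0
        total += grid_kwh * grid_gco2_per_kwh[hour]
    return total


def reduction_pct(baseline_g, optimized_g):
    """Relative reduction of the footprint, in percent."""
    return 100.0 * (baseline_g - optimized_g) / baseline_g


# Synthetic day: flat grid intensity, with the panels covering half of the
# boiler load at noon.
grid = [100.0] * 24
solar = [0.0] * 24
solar[12] = 550.0

baseline = grid_footprint_g([0, 1, 2], grid, solar)    # fixed night-time slots
optimized = grid_footprint_g([0, 1, 12], grid, solar)  # one slot moved to noon
print(f"{reduction_pct(baseline, optimized):.1f}% reduction")
```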

Personal Statement

Is this over-engineered? Absolutely. Similar results could be achieved with a timer, but it would require fiddling with the time settings depending on the weather or the grid's CO2 intensity, which varies on a daily basis.

Additionally, a number of sources provide forecast information about solar intensity. That said, the pricing of those sources exceeds the potential savings that one may expect on an individual home.

The main value of this EMS is that it shows that Dataiku can be used efficiently as an EMS, and that its flexibility allows the introduction of multiple sources of data. The ability to deploy the EMS onto board-like infrastructure makes it suitable for deployments into multiple locations.
