From NLP to OMG: Announcing Powerful New Generative AI Features in Dataiku

When I think about the possibility of Generative AI rendering jobs obsolete, it tickles me pink to think that my former job prior to joining Dataiku is already one of them! Well, perhaps not my whole job, but definitely the parts where I demonstrated how text analytics software could help companies tackle traditional NLP tasks like topic modeling, content categorization, sentiment analysis, entity extraction, and so on. Much to my chagrin, all of these tasks — which required significant time and technical expertise to get right — can now be performed with minimal time and effort (and NO knowledge of computational linguistics), thanks to large language models (LLMs).

Companies and individuals all over the world are scrambling to experiment with the most cutting-edge LLMs from multiple providers and capitalize on the opportunities they unlock. Good news! In Dataiku, we’re making it easier than ever to build and deploy LLM-powered applications that are safe, secure, and scalable. Read on to learn more about the new Generative AI platform capabilities just released in Dataiku. They help you go faster, create new types of value, and develop LLM applications, from simple to sophisticated, that will change the way people do their work.

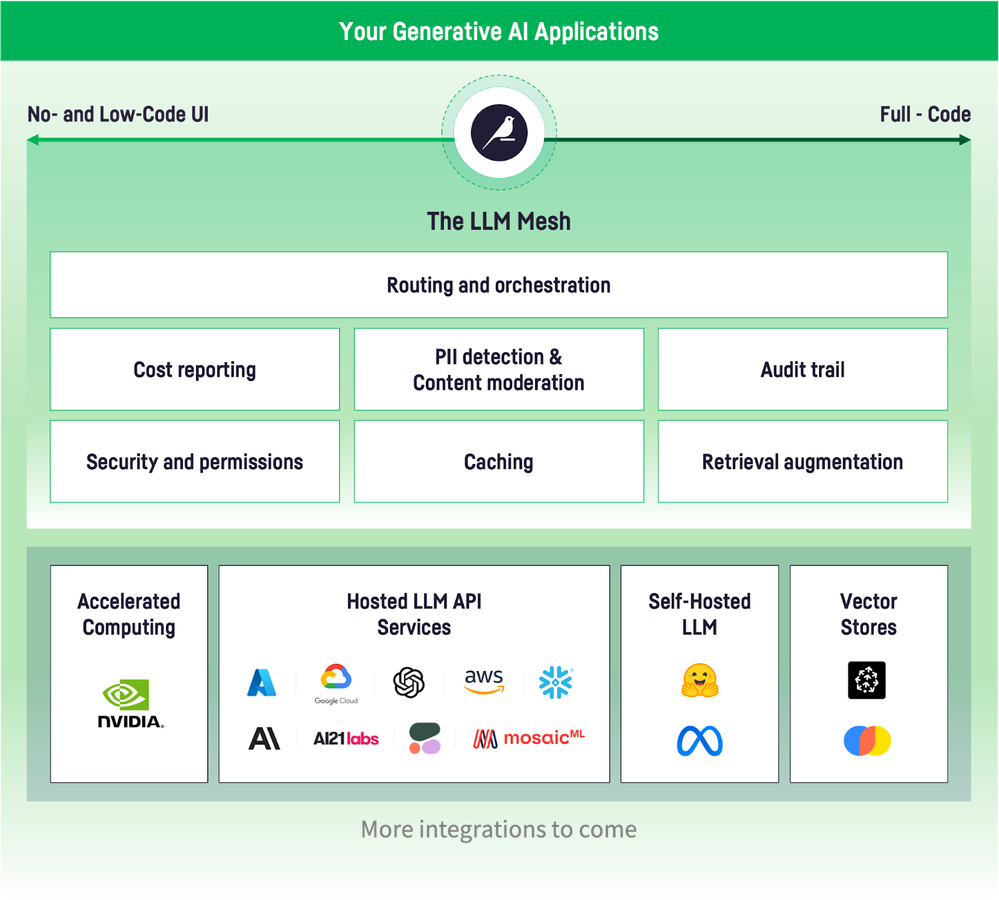

LLM Mesh

First off, you may have heard of Dataiku’s LLM Mesh, which we announced at our Everyday AI conference in New York at the end of September. While the different types of applications you can build with Generative AI are nearly limitless, there are a lot of components and requirements that they all have in common under the hood. Think of the LLM Mesh as a set of specialized features in Dataiku that serve as a technical backbone — a layer containing common functionality and controls that enable your team to efficiently build enterprise-grade LLM applications while addressing IT concerns related to cost management, compliance, and technological dependencies.

To learn more about the LLM Mesh, check out this video by Jed Dougherty, Dataiku’s VP of Platform Strategy.

The LLM Mesh contains a secure API gateway that enables platform admins to centrally configure connections and permissions to AI models and services and then generalize access in a controlled way.

It also provides components that help manage access and security, data privacy, content monitoring, cost reporting, and so on. Your infrastructure and AI services of record will certainly change over time. Because your LLM applications are not hardcoded to specific services, you keep the agility and flexibility you need to stay current in this fast-changing space.
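To make the decoupling idea concrete, here is a minimal sketch in plain Python. It is not Dataiku's API: the `Gateway` class, the two stub provider functions, and the logical name `"summarizer"` are all hypothetical, invented only to show how an application can call a logical model name while an admin swaps the provider behind it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical stand-ins for real provider SDK calls (no network access).
def provider_a_complete(prompt: str) -> str:
    return f"[provider-a] {prompt}"


def provider_b_complete(prompt: str) -> str:
    return f"[provider-b] {prompt}"


@dataclass
class Gateway:
    """Toy central gateway: maps logical model names to provider callables."""

    routes: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def register(self, logical_name: str, backend: Callable[[str], str]) -> None:
        # An admin decides which provider serves each logical name.
        self.routes[logical_name] = backend

    def complete(self, logical_name: str, prompt: str, user: str) -> str:
        backend = self.routes[logical_name]
        answer = backend(prompt)
        # Central place to record usage for monitoring or cost reporting.
        self.audit_log.append(
            {"user": user, "model": logical_name, "prompt_chars": len(prompt)}
        )
        return answer


gateway = Gateway()
gateway.register("summarizer", provider_a_complete)


def summarize_ticket(text: str) -> str:
    # Application code only knows the logical name, never the provider.
    return gateway.complete("summarizer", f"Summarize: {text}", user="analyst")


first = summarize_ticket("Printer is offline")
gateway.register("summarizer", provider_b_complete)  # swap the provider centrally
second = summarize_ticket("Printer is offline")
print(first)
print(second)
```

Note that `summarize_ticket` does not change when the provider does; only the registration in the gateway is updated.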

Prompt Studios

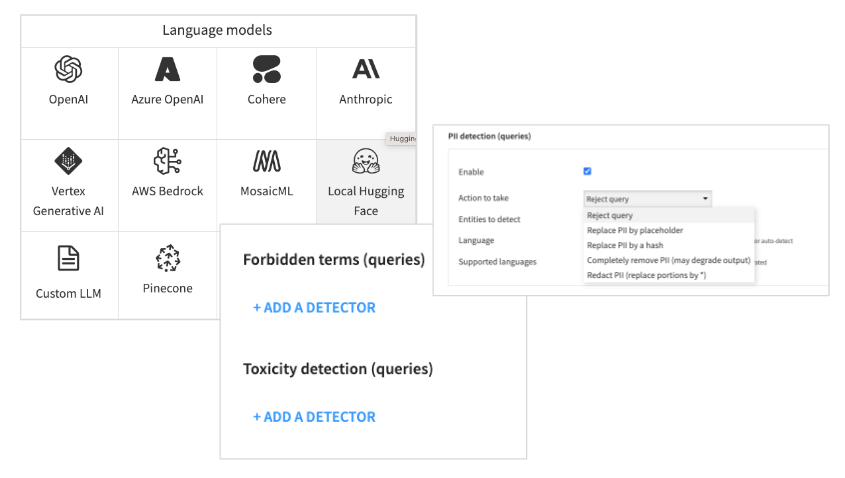

For any Generative AI task, engineering a high-quality prompt is the key to success. Prompt Studios in Dataiku allow you to design, test, and operationalize enterprise-grade LLM prompts. In the dedicated Prompt Studios interface, you can rapidly iterate on LLM prompts and validate them on real data, provide examples for few-shot learning, and compare both results and cost estimates across different LLM providers and models.
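For readers who have not written a few-shot prompt by hand, the sketch below shows roughly what such a template looks like and how a back-of-the-envelope cost comparison might work. Everything here is an assumption for illustration: the function names, the `Input:`/`Output:` layout, the model names, the prices, and the four-characters-per-token rule of thumb. None of it reflects how Prompt Studios builds prompts or estimates costs internally.

```python
from typing import Dict, List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], new_input: str
) -> str:
    """Assemble an instruction, labeled examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


def estimate_cost(
    prompt: str, price_per_1k_tokens: float, chars_per_token: int = 4
) -> float:
    """Very rough estimate: character count divided by an assumed token size."""
    tokens = len(prompt) / chars_per_token
    return tokens / 1000 * price_per_1k_tokens


examples = [
    ("The delivery was late again.", "negative"),
    ("Great support, thank you!", "positive"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "The app keeps crashing.",
)

# Hypothetical model names and prices per 1,000 tokens.
prices: Dict[str, float] = {"model-small": 0.5, "model-large": 10.0}
costs = {name: estimate_cost(prompt, price) for name, price in prices.items()}

print(prompt)
print(costs)
```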

Watch a short demo of Prompt Studios.

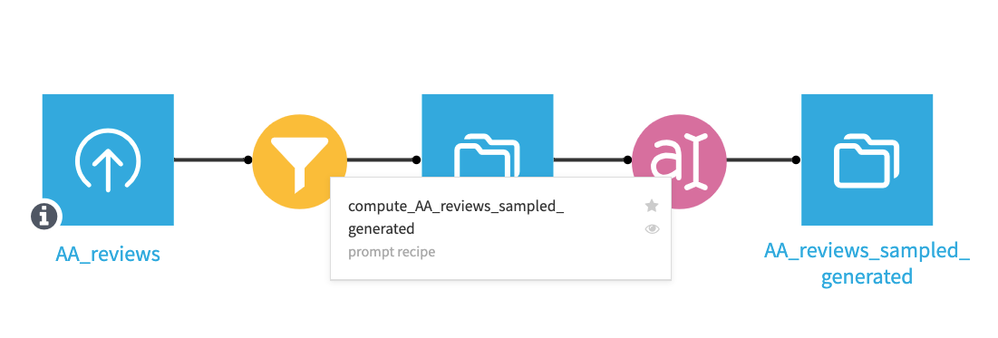

Once you are satisfied with your single-shot prompt or prompt template, the associated visual recipe operationalizes your prompt into your data pipeline as an object in the Flow. Remember: Thanks to the LLM Mesh, you can always choose to swap out the model or provider later with minimal disruption to the overall pipeline.

A prompt recipe in a Dataiku Flow

Retrieval Augmented Generation (RAG)

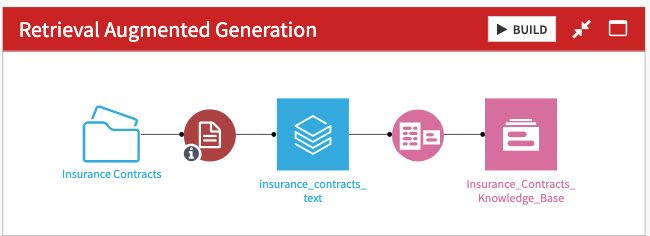

Chatbots powered by generic LLMs can save time for common queries, but are unable to access recent data or critical internal documentation and so may miss out on key details. What if you want to take advantage of these powerful foundational LLMs, but also customize them for your specialized task or provide them with information they weren’t originally trained on? By applying Retrieval Augmented Generation (RAG) and semantic search techniques in Dataiku, you can augment foundational LLMs with your own knowledge base to ensure chatbots provide the most relevant, accurate, and trustworthy information possible.

Watch a short demo of RAG components in Dataiku.

With Dataiku’s built-in components for RAG, you can easily transform your organization’s internal documents into a knowledge bank (stored as text embeddings in a vector store). When an end user asks a question, semantic search retrieves the most relevant chunks of information and includes them in the request to the LLM, thus informing the chatbot application with your own sources of proprietary or domain knowledge.

Visual components in Dataiku help you augment foundational LLMs with your internal knowledge
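The retrieve-then-augment flow described above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not Dataiku's implementation: the `embed` function is a simple bag-of-words count standing in for a real embedding model, `VectorStore` is a hypothetical in-memory list rather than an actual vector database, and the sample documents are invented.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory store of (chunk, embedding) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_augmented_prompt(question: str, store: VectorStore, k: int = 2) -> str:
    """Put the top-k retrieved chunks in front of the question."""
    context = "\n".join(f"- {chunk}" for chunk in store.search(question, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


store = VectorStore()
for chunk in [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client is required for remote access to internal systems.",
    "Vacation requests need manager approval two weeks in advance.",
]:
    store.add(chunk)

question = "How many days do I have to submit an expense report?"
top = store.search(question, k=1)
prompt = build_augmented_prompt(question, store, k=1)
print(prompt)
```

The augmented prompt is what would be sent to the LLM; the model call itself is left out because it depends on the provider.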

Native NLP Recipes

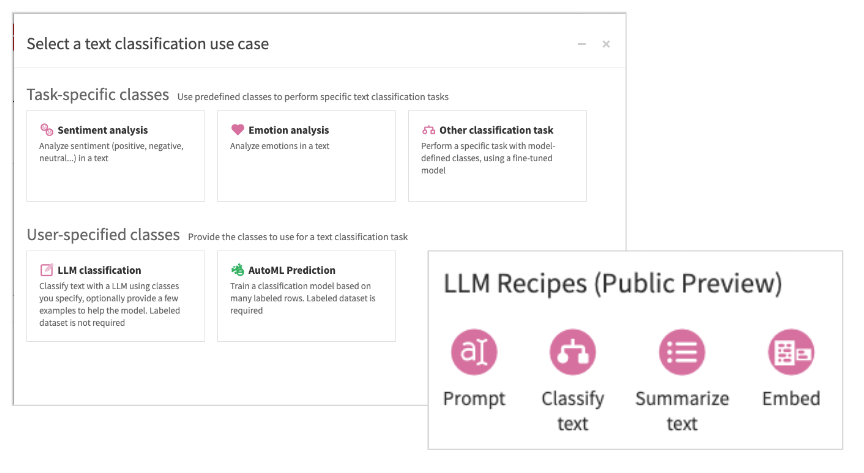

Finally, let’s cover the last major category of new Gen AI features available for public preview in Dataiku 12.3: the new built-in NLP recipes that oh-so-casually replace all my hard-won text analytics and linguistic rule-writing skills! These LLM-enhanced, visual recipes for traditional NLP tasks such as text classification, sentiment and emotion analysis, and summarization are a fast way to get ROI from Gen AI, simply by modernizing legacy NLP projects.

These recipes can process text in multiple languages, efficiently handle even lengthy texts by intelligently chunking them up into manageable sections, and provide easy access to both generic LLMs and pre-trained models that are specifically designed for a specialized task.
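As a rough picture of what chunking a long text means, the sketch below splits text into fixed-size, overlapping word windows. It is deliberately naive and is not how the Dataiku recipes chunk text (the post does not describe their method); the `chunk_text` function and its `max_words` and `overlap` parameters are hypothetical.

```python
from typing import List


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word windows of at most max_words, sharing `overlap` words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")

    words = text.split()
    step = max_words - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current window already reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


# A synthetic 120-word document: w0 w1 ... w119.
document = " ".join(f"w{i}" for i in range(120))
chunks = chunk_text(document, max_words=50, overlap=10)
for chunk in chunks:
    words = chunk.split()
    print(len(words), words[0], words[-1])
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of processing some words twice.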

Watch a short demo of native, LLM-enhanced NLP recipes in Dataiku.

In addition to these new LLM-powered recipes, don’t forget Dataiku also has plugins containing visual recipes for text extraction and OCR, named entity recognition, translation, and other common NLP tasks.

Learn More About the New Gen AI Features

Check out Dataiku’s dedicated Gen AI webpage to learn more about these exciting product additions, and as always, visit the official Release Notes to get more technical details and reference documentation. I encourage you to try out these new feature updates for yourself, and to let us know what you think in the comments!

All caught up on the release? Why not dive into the recordings from our recent conference, Everyday AI NYC?
