Sign up to take part

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Hello,

I have a vba code that does the following:

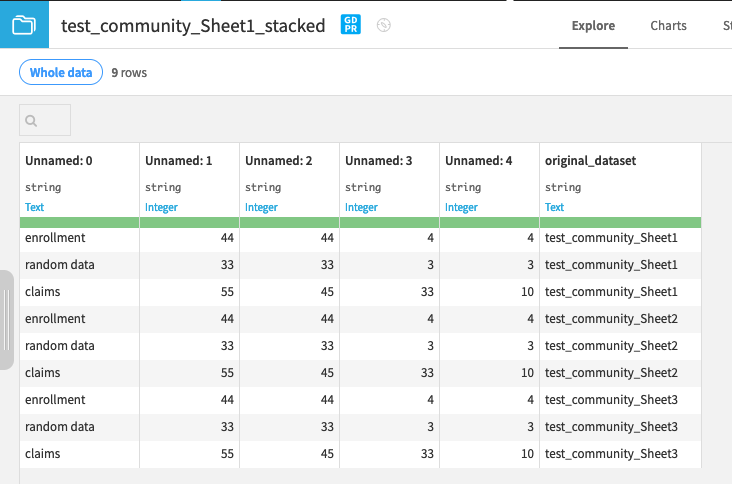

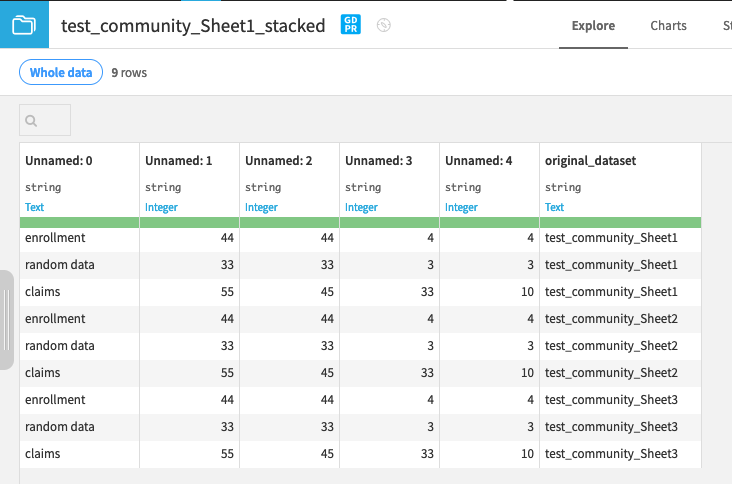

- opens 41 excel files. Each of these files has 4 to 5 tabs with information like incurred claims, paid claims, enrollment data in different rows.

- it then pulls all of this information in one single excel sheet. it categorizes the information based on the name of the file.

How could i do something like this on dataiku? Any help would be appreciated.

Operating system used: Version: 12.2.1

Hi,

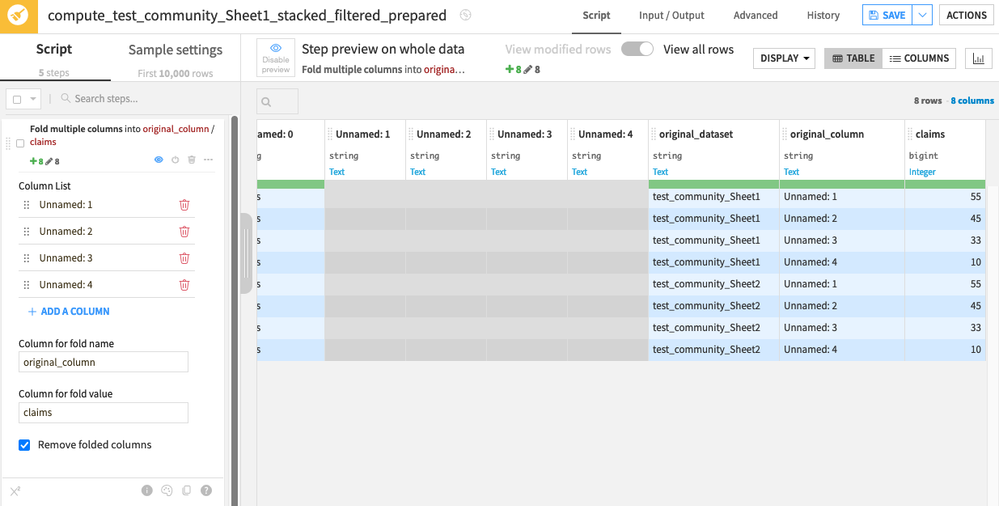

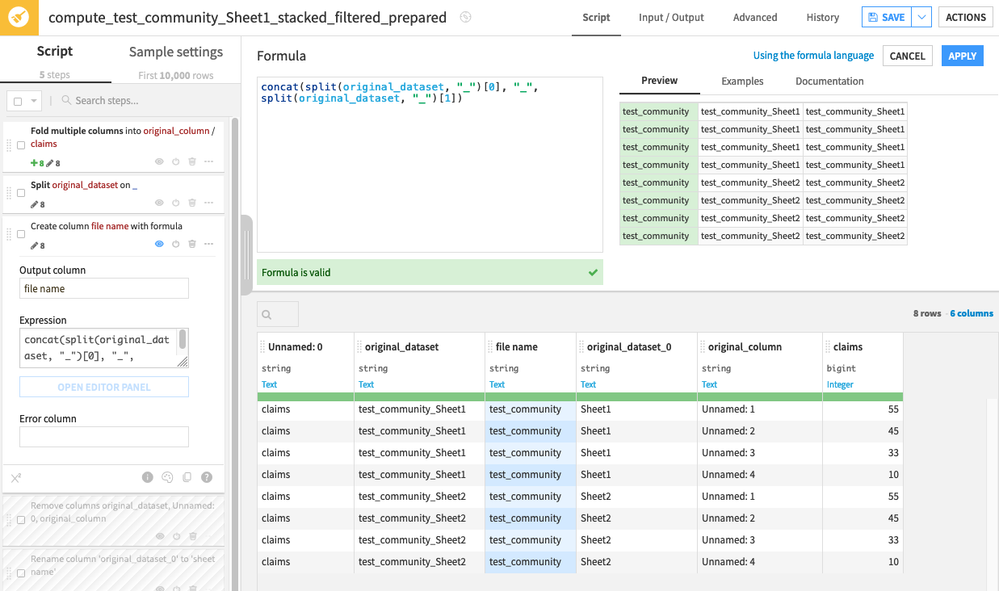

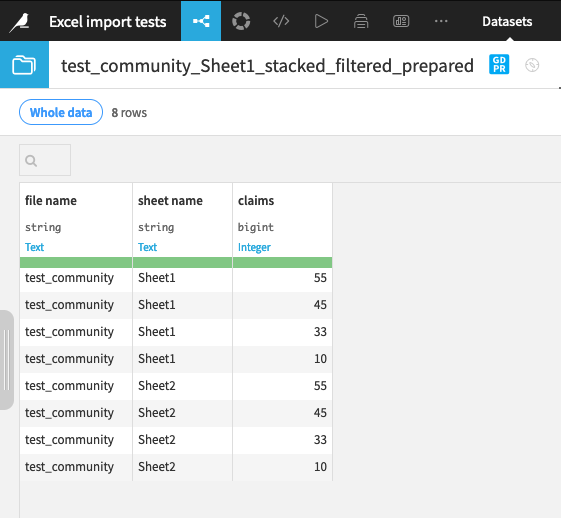

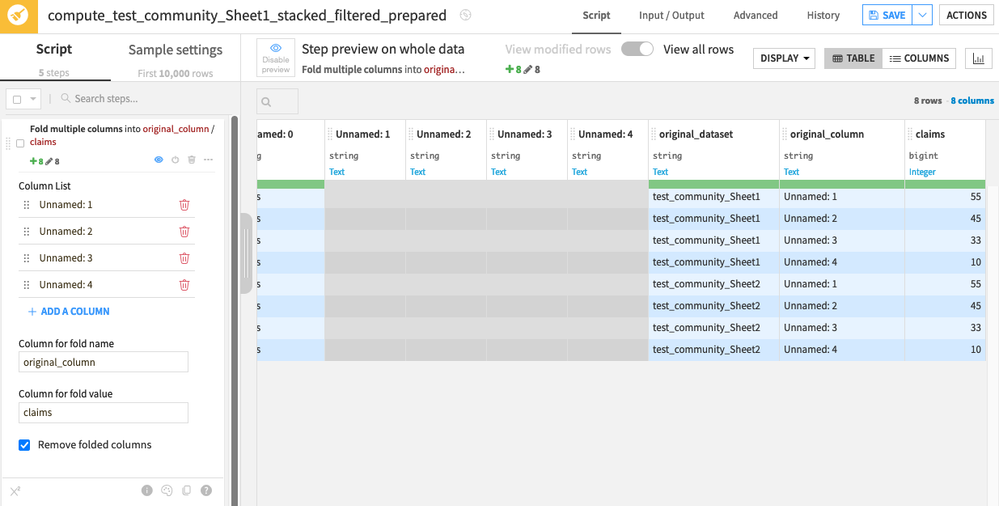

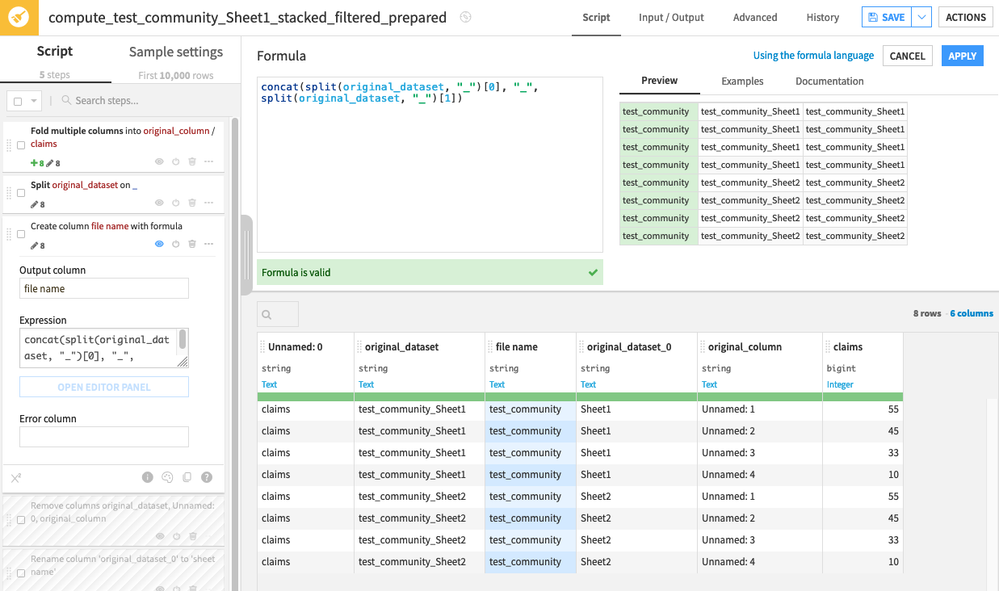

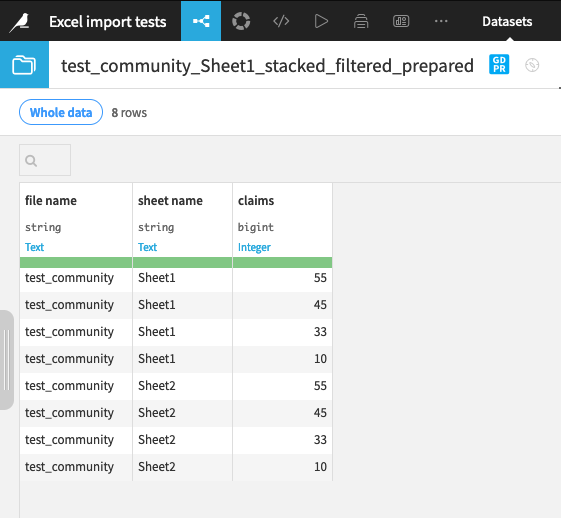

Thanks for the examples, it makes things much clearer. I managed to replicate your use case (using the excel sheet importer, if you created a single dataset from the folder, you can skip the stack recipe) and the final flow looks like this:

To go more into details we have:

For a start I would move away from Excel as your data sources. The data those Excel files have must sure be coming from a proper database so you should look to source the data from those data sources and load it up on in a Dataiku dataset. If you still need to use the Excel files have a look at the Files in Folder dataset.

Hi,

First of all, you won't be able to execute VBA in DSS, so you'll have to re-implement your code's logic in a DSS flow. Starting by importing all the excel files as datasets.

In order to help you, we might need more information:

Finally, @Turribeach's piece of advice is a valid one. If your excel files are exports from a data source, you might want to connect directly to this source, as you'll be able to directly pull the data when it changes, and automating the flow will be easier

Thanks for the quick response, Alexandre! Here is some information about the input files and what I'm trying to do:

- Directly pulling from the data source would be hard due to admin restrictions but I don't mind uploading all the 41 files into a folder on Dataiku project.

- All the input Excel files have the same format, same sheet names, but different file names.

- The input files may not have the same number of sheets/tabs in them..

Here are the steps for one input excel file right now:

- I want to pull one particular row (example row 56th) from a sheet in that input file and paste it as a column in the new file. I want to repeat this process for all the sheets in the input file (except one particular sheet named "total") and paste the new data below the previously pasted data. I want the sheet name and the file name in two separate columns to categorize the data. My output essentially should now have only three columns - sheet name, file name, and claim amounts. the data pulled from all the sheets/tabs in that one input file should be stacked on top of each other. They will be categorized by the sheet name and file name. I have attached images of the input and output files (these are examples) so it helps to visualize my ask, better.

- Now, I want to repeat this process for all the 41 input files. I want to go into each file, pull that particular row (it is the same row number in each file thankfully), pull the sheet name, file name, and paste the data in one single file.

I don't know if there is a recipe i can write or already a recipe that can help do this whole process. Your help is very much appreciated!

Hi,

Thanks for the examples, it makes things much clearer. I managed to replicate your use case (using the excel sheet importer, if you created a single dataset from the folder, you can skip the stack recipe) and the final flow looks like this:

To go more into details we have: