Delicious challenge! I'm going to try to create a model without 'industry' or 'hours worked per week'. Does this mean 'economic activity' is out as well? I could probably use the 'easy' model to see how well the following predict the target: age, social grade, health, and family composition.

Hello! The modeling is going well! However, so far I can't seem to build a model that scores anything other than 1.00 or close to it. I think I'll try removing all of the obvious features like "student", "hours worked", and "economic activity" and see what I get using just the demographics.

Have you made any further progress?

See the conversation below. We have discovered that.

--Tom

Tom - thanks for the tip! I forgot to check that part!

Thanks for the new dataset to dig into.

I note that the variables in the dataset are almost all encoded as numerical values. However, according to the data dictionary, these values are not numerical in nature; they are actually categorical, including age. For example, the value 1 = 0-15 years (a 16-year band) and 2 = 16-24 years, so the bands are not even the same width. The standard Visual ML setup treats all of these values as numeric rather than categorical. So I'm going to think about how to handle this in the Model Setup, or through some type of re-coding of the variables.
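Outside the Visual ML screens, one way to stop the codes being read as magnitudes is to cast them to a categorical type before modeling. A minimal pandas sketch, assuming hypothetical column names (`age`, `religion`, `ethnic_group`) and toy code values rather than the real Census headers:

```python
import pandas as pd

# Hypothetical column names and toy code values, not the real Census headers.
CODED_COLUMNS = ["age", "religion", "ethnic_group"]


def codes_to_categorical(df: pd.DataFrame, columns: list) -> pd.DataFrame:
    """Return a copy of df with each integer-coded column cast to the pandas
    'category' dtype, so the codes are treated as labels, not magnitudes."""
    out = df.copy()
    for col in columns:
        out[col] = out[col].astype("category")
    return out


raw = pd.DataFrame({
    "age": [1, 2, 8, 2],
    "religion": [2, 9, 1, 2],
    "ethnic_group": [1, 1, 4, 2],
})
clean = codes_to_categorical(raw, CODED_COLUMNS)
print(clean.dtypes)
```

In DSS itself, the rough equivalent is switching each feature's handling to Categorical in the model design.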

Also, the amount of data here keeps my 6-year-old laptop busy for 15 minutes to process everything. I'll have to look at some better sampling techniques; starting with the first n records seems potentially problematic.

@taraku good point - I'll add that to the list for hard mode.

@tgb417 Glad you're enjoying it!

Nice spot - I thought that particular little wrinkle would provide some interesting challenges.

And yes, I wouldn't advise using the first n records. I don't know that the data is in any particular order, but it wouldn't pay to assume it isn't!
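To see why taking the first n rows is risky, here is a small pandas sketch comparing `head(n)` with a seeded random sample. The toy frame is sorted by a hypothetical `region` column, standing in for any ordering the real file might have:

```python
import pandas as pd


def random_sample(df: pd.DataFrame, n: int, seed: int = 42) -> pd.DataFrame:
    """Draw n rows uniformly at random without replacement, reproducibly.
    Unlike df.head(n), this does not inherit any ordering in the file."""
    return df.sample(n=min(n, len(df)), random_state=seed)


# Toy frame sorted by a hypothetical 'region' column.
df = pd.DataFrame({"region": ["A"] * 500 + ["B"] * 500, "value": range(1000)})

head = df.head(100)
sample = random_sample(df, 100)
print(head["region"].value_counts().to_dict())    # {'A': 100}
print(sample["region"].value_counts().to_dict())  # expect both regions
```

Fixing `random_state` keeps the sample identical between runs, so model comparisons are made on the same rows.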

How are others doing with the Conundrum? It seems like a dataset for which one can build a fairly high-quality model. Currently, I'm getting a ROC AUC of about .95.

I would enjoy hearing from others about what they are seeing and some of the approaches under exploration.

Wow, that's one great result, how did you achieve that? That'd be an interesting case to look at.

In visual analytics, the Random Forest, Gradient Boosted Tree, XGBoost, and Artificial Neural Network algorithms all seem to like this data, once I realized that most of the data is categorical even though it is represented in a numeric format.

With the DSS defaults, these features are all treated as numbers. In that case, I'm only getting a ROC AUC of about .917 to .928, so the defaults do not get one to the most useful answers. Switching the feature handling to Categorical helps.

For example, here are the results of one of my runs.

That said, I'm still concerned about overfitting and information leakage. Many of the features have the -9 category, "No Code required (Students or schoolchildren living away during term-time)".

The N/As above that are showing up as important features seem like a bad idea to me because, of course, most of this group is not working. What do you think?

--Tom

Hi @tgb417

Yeah, I had a similar problem to yours, though mine was even a bit more extreme. My first attempt at the recipe flow went like this:

- Using the "Extra Trees" algorithm, I managed to get an R2 score of 0.977.

- And of course, I changed the variable types to categorical.

- And here's the screen capture for the algorithm part.

And here's the second attempt:

- Got an R2 score of 1.00.

- As an optimization, I made a couple of tweaks to the column variables and turned some of them into a boolean format.

- As a result, I got a Decision Tree output that is easier to navigate and understand.

- And here's the cost matrix.

- And here's how the finalized process would take place, with "2" being referred as "Employed" and "3" as "Unemployed".
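Collapsing a coded column into a boolean, as in the second attempt, can be sketched with `Series.isin`. The column name and the codes treated as `True` are hypothetical, not taken from the data dictionary:

```python
import pandas as pd


def to_flag(series: pd.Series, true_codes) -> pd.Series:
    """Collapse a multi-valued coded column into a boolean feature:
    True where the code is in true_codes, False otherwise."""
    return series.isin(true_codes)


# Hypothetical: treat codes 2 and 3 of a 'marital_status' column as True.
marital_status = pd.Series([1, 2, 3, 4, -9], name="marital_status")
flag = to_flag(marital_status, {2, 3})
print(flag.tolist())  # [False, True, True, False, False]
```

Fewer, coarser features like this tend to give shallower trees that are easier to read, at the cost of discarding the distinctions between the merged codes.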

It was fun doing this dataset, really enjoyed it! 😊

Cheers,

GLN

Have you tried the more realistic variant of the problem, where you leave out (turn off) the variables 'hours worked per week', 'economic activity', and 'industry', as suggested by @MichaelG. in the Conundrum?

In re-reviewing the challenge and my notes, I've discovered that I have not fully met those requirements either. In the version I showed, I notice that I left 'industry' turned on. That may be why I'm getting such high results: I'm "leaking" the answer into the prediction of the target variable. One is unlikely to have an industry if one is not working, so by leaving industry turned on, work status leaks into my model through this highly correlated feature. The result is not so much a prediction of work status as the model recognizing that, in this dataset, if an industry exists then the person is working. I'm going back to rework my model. The same sort of leakage occurs with the other variables recommended for exclusion.
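One quick way to spot this kind of leakage before training is to ask how well a single column predicts the target on its own. A minimal pandas sketch, assuming a binary `working` target and toy values in which a hypothetical -9 industry code stands for "no industry":

```python
import pandas as pd


def single_feature_accuracy(df: pd.DataFrame, feature: str, target: str) -> float:
    """Accuracy of the rule 'predict the majority target value within each
    category of `feature`'. Values near 1.0 mean this one column almost
    determines the target, which is a red flag for leakage."""
    majority_counts = df.groupby(feature)[target].agg(
        lambda s: s.value_counts().iloc[0]  # size of the majority class per group
    )
    return float(majority_counts.sum() / len(df))


# Toy data; -9 is a hypothetical "no industry" code.
df = pd.DataFrame({
    "industry": [-9, -9, -9, 3, 5, 3, 7, 5],
    "sex":      [1, 2, 1, 2, 1, 2, 1, 2],
    "working":  [0, 0, 0, 1, 1, 1, 1, 1],
})
for col in ["industry", "sex"]:
    print(col, single_feature_accuracy(df, col, "working"))
```

A perfect score for `industry` against a middling one for `sex` is the pattern described above. The check overstates high-cardinality columns (a column with a unique value per row scores 1.0 trivially), so treat it as a screening aid rather than proof.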

In addition, I've noticed that you have made only the sex variable categorical. Some of the other variables on the list do not actually represent a numerical range either. A feature like age is a range: there really are lower and higher values of age, and that ordering has meaning. I know this because I can answer the question of whether one age band is less than, greater than, or equal to another. But I'm not sure I can make the same less-than/greater-than/equal assertion about, say, religion or ethnicity. I'd like to invite you to consider which other features in our dataset are actually numerical ranges and which are categorical values hidden in a numerical representation.
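pandas can make this ordinal/nominal distinction explicit: an ordered categorical supports `<` and `>`, while an unordered one only supports equality. A sketch with hypothetical codes; the age codes 1-3 stand in for bands, and the religion codes are made up:

```python
import pandas as pd

# Ordinal: hypothetical age-band codes 1 < 2 < 3 with a meaningful order.
age = pd.Series(pd.Categorical([1, 2, 3, 2], categories=[1, 2, 3], ordered=True))
younger_than_band_3 = (age < 3).tolist()
print(younger_than_band_3)  # [True, True, False, True]

# Nominal: hypothetical religion codes with no meaningful order.
religion = pd.Series(pd.Categorical([2, 1, 9]))
try:
    _ = religion < 2
    religion_is_ordered = True
except TypeError:
    # pandas refuses <, >, <=, >= on unordered categoricals
    religion_is_ordered = False
print(religion_is_ordered)  # False
```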

Have fun, and thanks so much for sharing.

Hi Tom @tgb417,

Yep, that was a lot of fun, and you're so right about the categorical/numerical analysis. So here goes my third attempt. I left out 'hours worked per week', 'economic activity', and 'industry' and relied only on the following features: "Family Composition", "Sex", "Age", "Marital Status", and "Country of Birth".

- And let it run with Random Forest, as follows:

- And, for sure, I saw a significant drop in the ROC AUC, down to 0.811.

But it's still decent enough, and I had a good time running the models. Thanks for the input, Tom 😊.

-- Cheers

I pulled 'industry' out of my latest model run, and the scores seem unchanged, still around .95 ROC AUC.

For example, after re-mapping the age categories, I was able to produce a sensible partial dependence plot like the following on the models I'm working on with this data. Age is usually the top feature for me, and never lower than the top 5.

True for the Target variable means that the person is working.
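DSS draws the partial dependence plot for you; the underlying idea is to force every row to the same value of one feature, score all rows, and average the predicted probability. A pure-Python sketch, where `toy_model` and its probabilities are entirely hypothetical stand-ins for a trained model:

```python
def partial_dependence(predict_proba, rows, feature, values):
    """For each candidate value, set `feature` to that value in every row,
    score all rows, and average the predicted probability of 'working'."""
    curve = {}
    for value in values:
        modified = [{**row, feature: value} for row in rows]
        probs = [predict_proba(r) for r in modified]
        curve[value] = sum(probs) / len(probs)
    return curve


def toy_model(row):
    """Hypothetical stand-in for a trained classifier's P(working)."""
    base = {1: 0.05, 2: 0.60, 3: 0.80}[row["age_band"]]
    return base + (0.10 if row["sex"] == 1 else 0.0)


rows = [{"age_band": 1, "sex": 1}, {"age_band": 3, "sex": 2}]
curve = partial_dependence(toy_model, rows, "age_band", [1, 2, 3])
for band, p in curve.items():
    print(band, round(p, 3))
```

Because the other features keep their observed values, the curve reflects the model's average response to age across the actual population rather than for one hand-picked person.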

@gerryleonugroho I'm surprised your performance metric was the R2 score at the beginning, as this metric is for regression only (not for classification). Have you checked that the prediction type was set to binary classification? 🙂
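For a binary target like working/not working, ROC AUC is the usual choice. It can be computed directly as the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting as half. A pure-Python sketch, quadratic in the number of rows, so for illustration only:

```python
def roc_auc(y_true, scores):
    """ROC AUC as P(score of random positive > score of random negative),
    counting ties as 1/2. O(P*N): fine for a demo, not for millions of rows."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Unlike R2, this only depends on how the scores rank people, which is what matters when the model's job is to separate two classes.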

-Anita

Hi @anita-clmnt, actually I was just following along with the rest of the thread. Besides, it was a fun, quick-and-dirty analysis. Above all, it's a fun dataset to work with, and it keeps you busy at home during these uncertain times. I certainly hope you enjoy it too. 😊

PS: Stay safe during this pandemic.

Cheers,

Based on this data dictionary, do you have any thoughts about how best to handle, in DSS, the -9 values that appear in many of the columns?

"-9. No code required (Resident of a communal establishment, students or schoolchildren living away during term-time, or a short-term resident)"

This value seems to duplicate the student feature, and it's getting a lot of weight because it leaks into so many features.

I sort of want to do one-hot encoding for everything other than these -9 values. (At this point I've emptied out these values, but they keep showing up as high-value features.)

I've re-coded the -9 as NA above.
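A sketch of this recoding in pandas, with an optional flag column so the "no code" information is not thrown away entirely. The column names and values are hypothetical:

```python
import numpy as np
import pandas as pd

NO_CODE = -9  # "No code required" sentinel from the data dictionary


def sentinel_to_na(df, columns, sentinel=NO_CODE, keep_flag=False):
    """Replace the sentinel with NaN in the given columns. With keep_flag=True,
    first record where the sentinel was as a separate boolean column."""
    out = df.copy()
    for col in columns:
        if keep_flag:
            out[f"{col}_no_code"] = out[col].eq(sentinel)
        out[col] = out[col].replace(sentinel, np.nan)  # int column becomes float
    return out


# Hypothetical columns and toy values.
toy = pd.DataFrame({"occupation": [-9, 3, 5, -9], "industry": [-9, 2, 1, 4]})
recoded = sentinel_to_na(toy, ["occupation", "industry"], keep_flag=True)
print(recoded)
```

With `keep_flag=False` this is a plain blanking of -9; keeping the flag lets a model still use the fact that no code was recorded, which matters if -9 is itself predictive.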

Thoughts?

Hope you are well.

Those -9 values are not real missing values as they actually mean something. For example, for Occupation, it represents all the "people aged under 16, people who have never worked and students or schoolchildren living away during term-time". Because of that, getting models showing those categories as important is rather reassuring as it is clear that a large proportion of this category is currently not working. Therefore it helps us discriminate between the two types of individuals.

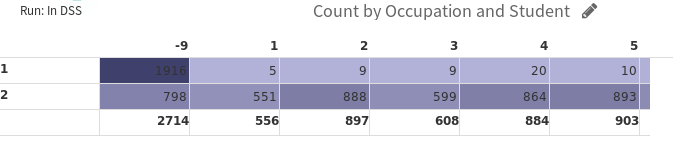

And actually Occupation=-9 doesn't duplicate 'Student' as the table below shows so I would keep them both!
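This duplication question can be checked with a cross-tabulation of the two indicators. A pandas sketch on toy rows; the `student` coding (1 = student, 2 = not) is a hypothetical assumption, not the data dictionary's:

```python
import pandas as pd

# Toy rows; 'student' codes are hypothetical (1 = student, 2 = not).
df = pd.DataFrame({
    "occupation": [-9, -9, -9, 2, 4, -9, 3],
    "student":    [1, 2, 2, 2, 1, 1, 2],
})

table = pd.crosstab(
    df["occupation"].eq(-9),
    df["student"].eq(1),
    rownames=["occupation_is_-9"],
    colnames=["is_student"],
)
print(table)
```

If the two features were duplicates, all counts would sit on the diagonal; off-diagonal counts show each carries information the other lacks.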

One-hot encoding is a good idea! None of the variables has any kind of order between its categories, so we can definitely use it!
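Outside DSS, a comparable one-hot encoding can be sketched with `pandas.get_dummies`; note that -9 simply becomes one more indicator column. Column names and values are hypothetical:

```python
import pandas as pd

# Hypothetical coded columns; -9 is the "No code required" value.
df = pd.DataFrame({"marital_status": [1, 2, -9, 1], "religion": [2, 1, 1, -9]})

# One 0/1 column per (variable, code) pair, e.g. 'marital_status_-9'.
onehot = pd.get_dummies(df, columns=["marital_status", "religion"], dtype="uint8")
print(list(onehot.columns))
```

Each original variable turns into exactly one active indicator per row, so no artificial ordering between codes survives the encoding.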

I actually tried running models with and without one-hot encoding (without using Industry, Hours worked per week, and Economic Activity, of course). The unfold processor produces a sparse matrix, which makes the model much faster to train, but because we have many categorical features, we end up with a large number of variables once they are all one-hot encoded. I got very similar AUC scores: 0.967 without one-hot encoding and 0.966 with it (trained on only 75% of the data, since I ran out of memory with the whole dataset), using XGBoost with 3-fold cross-validation and some hyperparameter tuning on a subsample.
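The memory side of that trade-off can be illustrated with the `sparse=True` option of `pandas.get_dummies`, used here as an analogue rather than a description of what the DSS unfold processor does internally. The 50-code column is hypothetical toy data:

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
# Toy high-cardinality coded column (hypothetical: 50 distinct codes).
codes = pd.Series(rng.integers(1, 51, size=10_000), name="occupation")

dense = pd.get_dummies(codes, prefix="occupation")
sparse = pd.get_dummies(codes, prefix="occupation", sparse=True)

# Dense stores every 0/1 cell; sparse stores only the non-zero cells plus indices.
dense_bytes = dense.memory_usage(deep=True).sum()
sparse_bytes = sparse.memory_usage(deep=True).sum()
print(dense.shape, dense_bytes, sparse_bytes)
```

The saving grows with the number of categories per variable, since each row contributes exactly one non-zero cell per original column regardless of how many indicator columns it expands into.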

Do you know whether it is documented somewhere which encoding method each algorithm in DSS uses?

-Anita

@anita-clmnt Thanks for jumping into the conversation.

I agree that -9 has a meaning. However, in my earlier looks at the data dictionary, I had read these all as students. While students appear in every -9 description, that is not the only meaning. Now that I look at this more carefully, I see that it is a mishmash of reasons why the data takers did not record a value:

"-9. No code required (Resident of a communal establishment, students or schoolchildren living away during term-time, or a short-term resident)"

Most of those values are better described by other existing columns.

For example:

I'm wondering about doing one-hot encoding and then eliminating or combining some of the options. Thanks for the pointer to the unfold visual recipe step. I may give that a bit of a try.

I've been training on random samples of ~100,000 to 275,000 records on my 6-year-old, 8 GB laptop using gradient boosted trees, and I've been getting a .965 AUC with 10-fold cross-validation.

I then tested the model on the remainder of the data, which was never shown to the model, and got almost exactly the same results.
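The evaluation scheme described above (a random training sample, k-fold cross-validation inside it, and an untouched holdout for the final check) can be sketched with index lists in plain Python. The row counts are illustrative, not the thread's:

```python
import random


def split_train_holdout(n_rows, train_size, seed=0):
    """Shuffle row indices; the first train_size go to training, the rest
    form a holdout set never used during training or cross-validation."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    return idx[:train_size], idx[train_size:]


def k_fold_indices(indices, k):
    """Yield (train, validation) index lists for k-fold cross-validation
    over the (already shuffled) training indices only."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


train_idx, holdout_idx = split_train_holdout(n_rows=1_000, train_size=250, seed=42)
for train, val in k_fold_indices(train_idx, k=10):
    pass  # fit on rows `train`, score on rows `val`
# Final check: score the chosen model once on rows `holdout_idx`.
```

Because the holdout never influences fitting or tuning, close agreement between the cross-validated AUC and the holdout AUC is good evidence the model is not overfitting the sample.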

When you ask about the encoding method, are you asking about the feature handling or something else?

One way I would look at this question myself is to examine the actual code DSS produces for the model.

Then you can take the Jupyter notebook and tear it apart.

Thoughts?