Best Practice for managing tables

Hi all,

I'm using dataiku with snowflake as the storage. The dataiku flow is generating many intermediate tables and many of them are not useful, they just serve as intermediate table to generate the final result. But they will appear in my snowflake and I feel that they are messy. What are your best practices for managing such tables? And how do you guys manage tables between different projects?

Comments

-

Alexandru Dataiker, Dataiku DSS Core Designer, Dataiku DSS ML Practitioner, Dataiku DSS Adv Designer, Registered Posts: 1,384 Dataiker

Alexandru Dataiker, Dataiku DSS Core Designer, Dataiku DSS ML Practitioner, Dataiku DSS Adv Designer, Registered Posts: 1,384 DataikerHi,

There are several approaches here, the easiest is to enable SQL Pipelines in your project for Visual recipe,s especially this will mean intermediate datasets are not materialized by default: https://doc.dataiku.com/dss/latest/sql/pipelines/sql_pipelines.html

So you won't see the tables in Snowflake.

The other approach is to clear/drop intermediate datasets yourself via tags for example :

https://community.dataiku.com/discussion/19049/add-clear-dataset-data-by-tag-macro-similar-to-delete-datasets-by-tag

You can also use a different schema for intermediate datasets to prevent clutter of intermediate datasets.

Would not recommend using a different connection because this will reduce the computational efficiency of your flow :

https://knowledge.dataiku.com/latest/data-preparation/pipelines/concept-pipeline-optimization.html

Kind Regards, -

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,626 Neuron

Turribeach Dataiku DSS Core Designer, Neuron, Dataiku DSS Adv Designer, Registered, Neuron 2023, Circle Member Posts: 2,626 Neuron@Alexandru suggestions are sound options but I feel you may be going against the flow here (pun intended!). Dataiku's strongest USP is that it can be used by "clickers" and "coders", meaning people that know Data Engineering / Machine Learning (aka Data Scientists) and those that don't. Having the flow persist every intermediate dataset supports that vision. It's easy to see the transformations visually when you can see what the output of each step is and breaking complex data pipelines in steps makes it much easier to understand. Visual recipes also provide a zero code approach that anyone can easily understand. If you use SQL Pipelines you are breaking that capability for little to no gain. The other thing you get by persisting the intermediate results is traveability and debug capability. Try to figure out the issue with a complex process with just the inputs and outputs is way more complex than debugging it step by step. So again you will remove this capability if don't have intermediate results.

At the end of the day storage is very cheap these days and virtually unlimited and available on demand, assuming you are on cloud (which you should). In most cases your bigger costs are people since the people that work on Dataiku projects will tend to be high value resources (Data Scientists, SMEs, BAs, Experts, PMs, Business Users). So making things more complex to understand and debug by saving some money on storage or because you think it looks messy doesn't seem like a reasonable trade off.

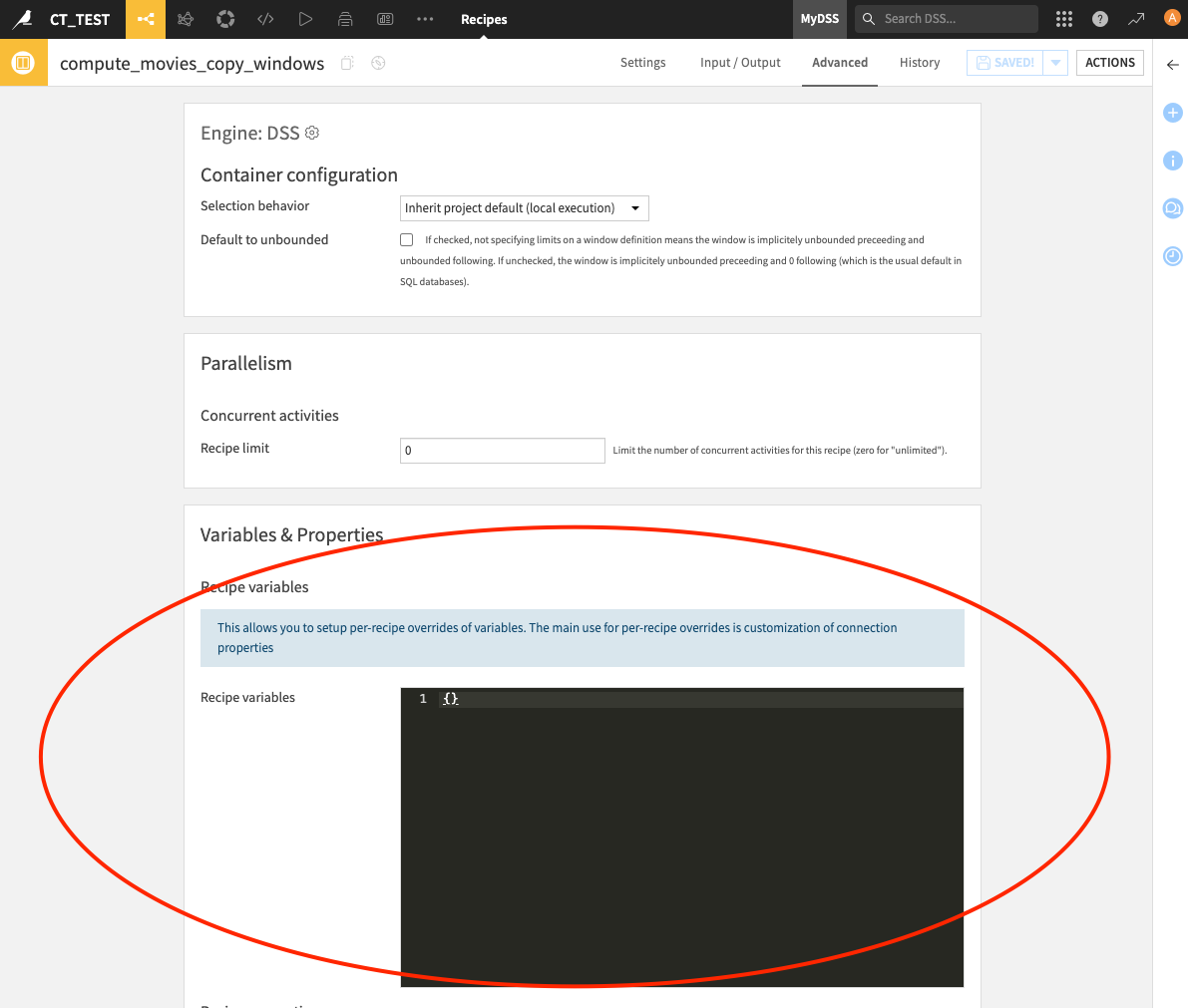

One thing you could do is to write output datasets to a separate Snowflake schema. That way you can separate intermediate tables from output tables and ignore the "noise". This could be done using a variable in the write schema in the connection which you can then customise in the recipe variables section. But you will have to maintain this manually so it's not silver bullet solution. -

@Alexandru & @Turribeach thanks for the answer, both are great insights for me