- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Galeria – Automatic Data Transfer Across Servers for Easier Accessibility

Name: Daniel Bindig, Data Engineer/Scientist

Country: Germany

Organization: Galeria

Galeria is Germany's largest, and Europe's second-largest department store chain.

Awards Categories:

- Best Acceleration Use Case

- Best Data Democratization Program

- Best Approach for Building Trust in AI

Business Challenge:

As a retail company, we must handle huge amounts of data. Especially moving data from one database to another can get arbitrarily complex. In our case, we needed to transfer data from an MS SQL Server database to a Snowflake database.

With our solution, we wanted to fulfill several requirements:

- Easy for everyone: Every team member should be encouraged to use the project in order to transfer data, especially those working with dbt and not familiar with Dataiku. Users of dbt should just have to modify a config table. Dataiku users should be able to do the job with only a few clicks.

- Standardization: Keep the used tech stack as small as possible. We have HVR in use, but no license for MS SQL Server and no plan to use more tools such as Informatica. In our team, resources are rather limited.

- Automatic manner: At best, a user does not have to look into the project when using it. This means data pipelines must be created and run automatically. The result is data in the target database.

- An arbitrary amount of data: Transfer an arbitrary number of datasets as fast as possible, even in parallel.

- Transfer in modes ‘Full’ and/or ‘Delta Load’: Data transfer must be performed either as full load (all data at once), or delta load (incrementally) in small pipelines.

- Stability: For stability reasons, it must be possible to easily adjust the transfer parameters, such as number of rows transferred in one step. If a transfer step breaks, already transferred data should not be lost.

- Take care of sensitive data/security: Do not fetch columns that contain sensitive data.

- Clearness: Even when transferring hundreds of datasets, the project structure in Dataiku (flow) should be as clean as possible.

- Monitoring: Data transfer must be monitored in order to intervene immediately if needed.

- Expandability: The workflow should be easy to expand, i.e., with small changes it should be usable for transfers from/onto other database types.

Business Solution:

We chose Dataiku since all requirements can be implemented in an automatic framework using the Dataiku API. This solution uses ‘code’ instead of ‘click’.

In Snowflake, we built a configuration dataset holding source information. Each line corresponds to a dataset in the source database having a:

- database schema

- table name

- technical topic

- ‘rebuild’ parameter (triggering data transfer)

- type of load (full / delta)

- set of two numbers steering the transfer velocity

- collection of columns to fetch. Sensitive data is just ‘blank’ and not treated anywhere.

Two Python recipes steer the data transfer:

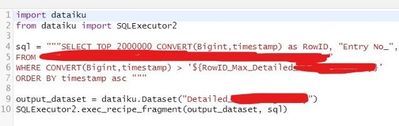

- In compute_subflow_setup configuration data is used. For each dataset marked for transfer (‘rebuild’ = Yes), the source dataset is imported (using the functionality of ‘project.init_tables_import()’), and a subsequent Python recipe is built (‘builder = project.new_recipe("Python")’). This newly created Python recipe contains a small SQL SELECT query composed of the number of rows to be transferred in one run, the needed columns, and a condition ensuring data is not fetched twice. SQL is executed via ‘SQLExecutor2.exec_recipe_fragment(output_dataset, sql)’. If for 100 datasets ‘rebuild’ is ‘Yes’, 100 small flows are created automatically (input, recipe, output) and grouped into flow zones according to their technical topic. In the case of ‘delta load’, the first 10 rows are transferred in order to have the condition in the SQL snippet usable.

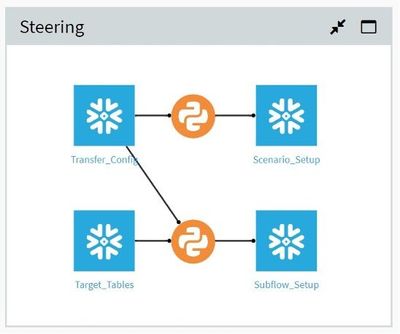

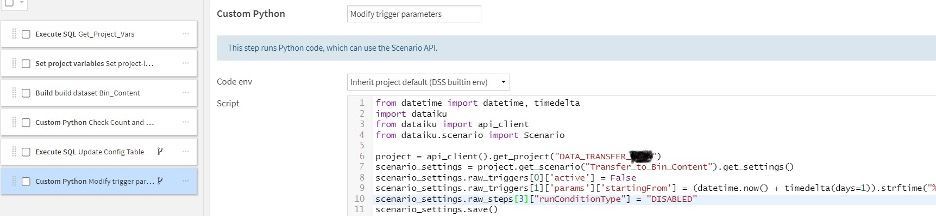

- In compute_scenario_setup a scenario is built for each subflow, each containing two triggers, a mail reporter, and 4-6 steps depending on load type. The first steps of each scenario perform the data transfer based on a custom trigger. The last two steps are conditional and run only once if the data was transferred entirely (full load) or the number of transferred rows exceeds a particular value (delta load). In these steps ‘rebuild’ is set to ‘No’, the first trigger is deactivated, and the second (time) trigger is activated, fetching new data once per day. For stability, the number of rows fetched is adjustable in the configuration. Furthermore, a random sleep time (2-3 minutes) is included in the custom trigger so that parallel scenario runs are somewhat separated.

An overall scenario executes the two Python recipes sequentially and triggers if ‘rebuild’ in the configuration changes. Thus, a dbt developer simply changes the configuration and waits for the transfer to finish.

The project was implemented by a Data Engineer with advice from an ETL developer using dbt, and supported by another Data Engineer, Data Scientist, and a working student.

Business Area Enhanced: Other - Retail

Use Case Stage: In Production

Value Generated:

Our team members do not have to care about how they get data from A to B. They do not even have to think about how the project works in detail. It is just necessary to apply a small and simple change in the configuration, i.e., adding a line to the configuration data.

Neither more definitions nor great knowledge is needed. The task works completely automatically. This allows us to spend time on other work.

The motto of the project is “You need to transfer data? Go and do it easily”.

Value Brought by Dataiku:

Using the bulk load which is introduced by the Python recipes in each subflow makes the data transfer very fast and safe. If during one load step anything crashes, only the data of the current load is lost. The data transferred earlier can be used as a starting point when the transfer is restarted automatically.

Furthermore, we benefit from the direct connection to the target database (Snowflake in our case). No one must think about handling, extracting, or storing flat files.

If a scenario crashes, a mail reporting set up in Dataiku using AWS SES informs the user about the scenario that crashed as well as the type of error. If required, fast interaction is possible.

Value Type:

- Reduce cost

- Reduce risk

- Save time

- Increase trust