Sign up to take part

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Hi,

I want to column names of a very wide table (500+ columns and 1 million rows).

The following are what I have attempted and they both failed with the exact same error message:

" Invalid argument

datasets = project.list_datasets()

# Returns a list of DSSDatasetListItem

for dataset in datasets:

# Quick access to main information in the dataset list item

print("Name: %s" % dataset.name)

print("Type: %s" % dataset.type)

print("Connection: %s" % dataset.connection)

print("Tags: %s" % dataset.tags) # Returns a list of strings

# You can also use the list item as a dict of all available dataset information

print("Raw: %s" % dataset)

identified the desired data set

dataset = project.get_dataset("target_df")

settings = dataset.get_settings()

# print out current column schema

for column in settings.schema_column:

print("Have column name=%s type=%s" % (column["name"], column["type"]))

curre_schema = settings.schema_columns

## make changes to the first column

curre_schema[0]['name'] = 'index_changed'

print(settings.schema_columns) #printed "index_changed"

#save new schema back into the dataset

settings.save()

When I went and check out the data table, in another window, I got the error mentioned above. Based on the error message, the schema is modified but I lost the entire data set doing this.

2. I followed this link as well:

# -*- coding: utf-8 -*-

import dataiku

import dataikuapi

import pandas as pd, numpy as np

from dataiku import pandasutils as pdu

# Read recipe inputs

target_dt = dataiku.Dataset("target_df")

target_dt_df = target_dt.get_dataframe()

#check column names in target_dt_df

target_dt_df.columns # first column is "index"

#change column name for the first column

target_dt_df.rename({'index':'index_changed'}, axis = 1, inplace = True)

# check to ensure the rename has been applied

target_dt_df.columns ## first column is now "index_changed"

#reduced dataframe size as I only care to change the schema

target_dt_df = target_dt_df.iloc[1:3]

#reflect the latest schema back to the original "target_df"

target_dt.write_schema_from_dataframe(target_dt_df)

I am really confused about why I am only able to change the schema locally but this cannot apply back into the Postgre SQL copy (which is what I really wanted).

Any help and insight is very much appreciated.

Thank you so much for your time and thoughts.

Regards,

KT

Hi @KT ,

I suspect that your Postgres table name is slightly different from the dataset name you see in DSS. By default the PROJECT_KEY is prepended to dataset names in table names in the underlying database. Sorry I didn't mention this earlier.

Can you double-check the table name by going to your dataset > Settings tab and verify the tablename:

Where ${projectKey} is a variable that resolves to the full project key. If it looks like this, then you would just need to adjust your SELECT statement to point to the full tablename:

"SELECT column_name FROM information_schema.columns WHERE table_name = 'YOURPROJECT_schema_df'"

Just in case you are curious, you can also pull this setting from the API:

Hopefully that resolves the empty array issue, let us know if not!

Thanks,

Sarina

Hi

in DSS a postgresql dataset is merely a "view" of the actual table in the DB. So changing column names in the dataset, be it in the UI or via the python API only change DSS's definition of the dataset, and are not magically translated into column modifications in the DB.

If your dataset is the output of some recipe in DSS, ie if DSS manages the dataset, then modifying the recipe producing the dataset to have the new column names, and re-running it, should be all that's needed.

If your dataset is an input to your flow, then you need to change the column names in the DB and re-sync the schema in the dataset's Settings > Connection tab. If you need to script the changes, you can leverage query_to_df() to issue "ALTER TABLE ... RENAME COLUMN ..." statements to the DB (despite the name, the method can run statements that return no data; just remember to add a COMMIT as post-query, since postgresql has transactional DDL)

Thank you very much for your insight. I assure you that I am not looking for any magical here, perhaps misguided by the Dataiku documentation.

If one follows the literal instruction as given by the documentation of Dataiku, it never mentioned that we are merely changing the view.

It states that "the schema of the dataframe is used to modify the schema of the output dataset". Perhaps this is something which I would insist for Dataiku to write the documentation more clearly and properly express the true behavior of these functions. I was able to modify the schema but I lost all the data due to this mismatch. If I lost the entire dataset after modifying the schema, what is the practical use case to justify this?

Regarding your proposal, the first option is still not practical, it is a very large data table and it does not make sense to load the entire table into the local system just to modify the table schema. I am simply interested in a solution where I can change the table schema or a very very large data set without loading the entire table.

I am very thankful that you pointed out about qurey_to_df(),I will have to take another day out of my work to give this a try as there are very limited documentation / examples for me to follow from what's currently available in Dataiku's documentation. Wish me luck!

Humbly,

@KT thank you for your feedback and we apologize for any frustration that may have been caused. I reached out to you directly with some more info. Hopefully that will begin to help find you a solution. Thanks again for your reply and utilizing the Dataiku Community.

Hi @KT,

To follow up on the query_to_df() example, here's an example of executing an ALTER TABLE statement with query_to_df(). I agree that it would be helpful to have additional examples in the documentation for more use cases.

I have a tiny table compared to your 500 columns, but just for a brief example, here's my original Postgres table:

sarina=# \d+ "MY_TABLE"

Table "public.MY_TABLE"

Column | Type | Collation | Nullable | Default | Storage | Stats target | Description

----------+------+-----------+----------+---------+----------+--------------+-------------

new_name | text | | | | extended | |

age | text | | | | extended | |

In a DSS Python notebook, I do the following, where my database connection is named "postgres":

Now in my database, if I check the table again:

sarina=# \d+ "MY_TABLE"

Table "public.DKU_HAIKU_STARTER_1_name_agenew_copy"

Column | Type | Collation | Nullable | Default | Storage | Stats target | Description

-------------+------+-----------+----------+---------+----------+--------------+-------------

better_name | text | | | | extended | |

better_age | text | | | | extended | |

If you could use any other help executing this for your use case, please let us know where you get stuck and we can take a look!

Thanks,

Sarina

Thank you for the example! It is clear about the overall structure in what the syntax and logic is. However, my dataset came out empty after executing the following code. If you can give me some pointers to debug this, it would be awesome!

# -*- coding: utf-8 -*-

import dataiku

import pandas as pd, numpy as np

from dataiku import pandasutils as pdu

from dataiku import SQLExecutor2

executor = SQLExecutor2(dataset = "schema_df")

sql_database = executor.query_to_df(

"SELECT column_name FROM information_schema.columns WHERE table_name = 'schema_df'"

)

original_names = list(list(sql_database.column_name))

print(original_names)

>> []

# check and verify dataset indeed has column names and schema stored

schema_df = dataiku.Dataset("schema_df")

schema_df_df = schema_summary_df.get_dataframe()

schema_df.columns

>> Index(['A', 'XXX', 'YYY', 'ZZZ', 'BAB'], dtype='object')/

Hi @KT ,

I suspect that your Postgres table name is slightly different from the dataset name you see in DSS. By default the PROJECT_KEY is prepended to dataset names in table names in the underlying database. Sorry I didn't mention this earlier.

Can you double-check the table name by going to your dataset > Settings tab and verify the tablename:

Where ${projectKey} is a variable that resolves to the full project key. If it looks like this, then you would just need to adjust your SELECT statement to point to the full tablename:

"SELECT column_name FROM information_schema.columns WHERE table_name = 'YOURPROJECT_schema_df'"

Just in case you are curious, you can also pull this setting from the API:

Hopefully that resolves the empty array issue, let us know if not!

Thanks,

Sarina

Hi @SarinaS Sarina,

Thank you so much for your quick response and incredible detail to resolve this issue with me!

This is awesome. It is a mismatch in the dataset name (for some reason, the displayed dataset name is capitalized but not when I view the name under setting as you pointed out!)

To follow up regarding your super helpful example code. Once I changed the column name using the code block you gave me, then I load the data set back and check the column name as below. The schema doesn't apply into the dataset.

# -*- coding: utf-8 -*-

import dataiku

import pandas as pd, numpy as np

from dataiku import pandasutils as pdu

from dataiku import SQLExecutor2

executor = SQLExecutor2(dataset = "schema_df")

sql_database = executor.query_to_df(

"SELECT column_name FROM information_schema.columns WHERE table_name = 'PROJECT_123_schema_df'"

)

original_names = list(list(sql_database.column_name))

print(original_names)

>>['A', 'XXX', 'YYY', 'ZZZ', 'BAB']

for name in original_names:

new_name = "better_"+name

alter_statement = 'ALTER TABLE "PROJECT_123_schema_df" rename column "' + name + '" to "' + new_name + '";'

executor.query_to_df(alter_statement, post_queries=["COMMIT"])

# check if new column names applied to the dataset

cur_df = dataiku.Dataset("schema_df")

cur_df_df = cur_df.get_dataframe()

cur_df_df.columns

>> ['A', 'XXX', 'YYY', 'ZZZ', 'BAB']

I went straight to the dataset in the "Flow" to check if the schema has been changed correctly. I noticed that in the "Explore" view, it was still listed with the old column name, then when I clicked on "Settings", I saw a warning

"Connection OK. Table exists, but schema does not match : Name mismatch for column 1 : 'A' in dataset, 'better_A' in table"

I have to manually click on "RELOAD SCHEMA FROM TABLE" and I can see the new column name applied. Is there a way to apply this command to reload the schema from the table within the Python code so that I can move forward with the latest column name inside the Python code block automatically?

Please let me know.

KT

Hi @KT ,

I'm glad to hear that this seems to be working for you!

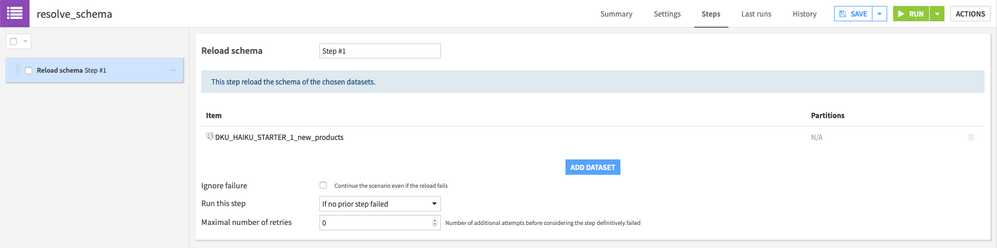

For the "Reload schema from table" question, there isn't quite an equivalent of this function in the Python API. As of DSS 9, there is a scenario step that you can use to automate the schema reload:

So one option would be to automate this through a scenario. You could also create a scenario similar to the one above, and then trigger that scenario through your Python code. To trigger an existing scenario that you created through the UI that has the "Reload schema from table" step you could do something like this:

import dataiku

import pandas as pd

client = dataiku.api_client()

project = client.get_project('YOUR_PROJECT')

scenario = project.get_scenario('YOUR_SCENARIO')

res = scenario.run_and_wait()

I hope that's helpful,

Sarina

Hi @SarinaS .

When you press "Reload schema from table", where is DSS getting that information from? I'm having this issue with a few parquet files, imported via the HDFS connection. A couple of them default to Excel format; when I change the format to parquet the data looks good but the schema is still wrong until I press "reload schema".

Is there a way to get the real schema (that DSS can somehow see, as the column headers are correct in the Explore view even before I reload schema) programmatically so that I can programmatically set the schema?

I'm on V9.

Hi @JH,

If you change the dataset format (i.e. from Excel format to Parquet), it will require clicking the "reload schema" button because of the format change from the original dataset format of Excel to Parquet format.

The main workaround for this is to change the dataset format to Parquet prior to creating the dataset. This will prevent the need for the "reload schema" step. It does look like there is currently an outstanding issue where Parquet datasets can be incorrectly detected as CSV/Excel format, which sounds like what you may be running into here. If ensuring that the dataset format is set to Parquet prior to dataset creation is not a good option, the best option for automating the "reload schema" step is probably to take the approach I outlined above and use the scenario step. To more fully automate this through the API, you can pull the scenario settings through the scenario.get_settings() API method and then update an existing step that performs "Reload schema" to point to a new dataset, so that you can change the step to a specific dataset and then run the scenario from Python.

Thanks,

Sarina

This is great @SarinaS ! I will definitely give this a try when the DSS system I am using gets upgraded to DSS 9 then! Thank you so much for your patience, explicit instructions and examples!