Sign up to take part

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Registered users can ask their own questions, contribute to discussions, and be part of the Community!

Hi everyone,

When working with Redshift tables in DSS visual recipes we noticed that the table creation settings sometimes defaults to setting certain column lengths to the redshift max (65,000). In many cases this becomes excessive. For example, in the screenshot below the "brand" column has a length of 65k but most of the column has text that span less than 10 characters.

We wanted to better understand the logic of column length setting defaults for Redshift and if there is a safe / proper way to modify this.

Hi,

When creating datasets from input Redshift (or other SQL databases), DSS will automatically fetch the column lengths from the Redshift table.

However, when creating a new Redshift dataset from columns which do not have a fixed length (as is the case for example when syncing from a CSV or Parquet file), DSS cannot know what the maximum size will be. It therefore takes a safe approach and defaults to setting up the maximum length possible for the database.

You can of course modify the length in the schema screen of the dataset.

DSS does not "simulate" the entire process in order to know what the maximum length may be, as it would be extremely slow and brittle.

We are looking into potential future improvements to make the default max length configurable. This will make it easier to work with the SQL databases (like Redshift) where the max length of varchars has a performance impact (it doesn't for most SQL databases)

Hi,

When creating datasets from input Redshift (or other SQL databases), DSS will automatically fetch the column lengths from the Redshift table.

However, when creating a new Redshift dataset from columns which do not have a fixed length (as is the case for example when syncing from a CSV or Parquet file), DSS cannot know what the maximum size will be. It therefore takes a safe approach and defaults to setting up the maximum length possible for the database.

You can of course modify the length in the schema screen of the dataset.

DSS does not "simulate" the entire process in order to know what the maximum length may be, as it would be extremely slow and brittle.

We are looking into potential future improvements to make the default max length configurable. This will make it easier to work with the SQL databases (like Redshift) where the max length of varchars has a performance impact (it doesn't for most SQL databases)

Hello @Clément_Stenac ,

was there any progress on this improvement that you mention? I am regularly running into a similar issue with MySQL db, where it defaults to varchar(500) which is far from sufficient for the text data I work with. Then I have to manually edit the create table statement to text or longtext for it to work. Is there any DSS setting that would allow me to use text instead of varchar(500) by default?

Is there a ticket or development request that I could raise which would change the schema from varchar(500) to longtext whenever the job fails because of the Data too long error for a given field in the table?

Apparently, DSS is aware of the error type and also the field name, so from my point of view it should be able to adjust the schema by itself and load the data again without human intervention

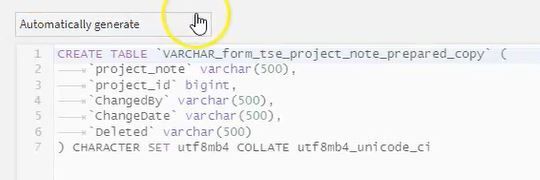

before - default behavior today:

you get an error:

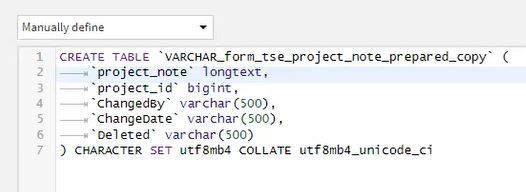

schema should be adjusted:

run it again and it works, Job succeeded

Hello, can anyone confirm that this is the only place where we're able to change the max length of a string column?

Hi, correct, here or in the advanced tab inside the create table statement

I am also facing this issue. It would be nice to have some options other than having to manually define the table creation script.

I also found that even though I manually set the schema for a dataset using the json fields, it gets overwritten when writing a python dataset. If I manually define the schema creation script then I get load errors: Load into table failed. Check 'stl_load_errors' system table for details - that table appears blank to me.